Apple is famous for being protective of its projects and for expecting secrecy from its workers. Now, according to The Wall Street Journal, the tech giant is concerned about the possibility of its employees inadvertently leaking proprietary data while using ChatGPT. To prevent that scenario from happening, Apple has reportedly restricted the use of ChatGPT and other AI tools, such as GitHub's Copilot, which can autocomplete code. The Journal also says that Apple is working on large language models of its own.

In early April, The Economist Korea reported that three Samsung employees shared confidential information with ChatGPT. Apparently, one employee asked the chatbot to check database source code for errors, while another asked it to optimize code. The third employee reportedly uploaded a recorded meeting to the chatbot and asked it to write minutes. It's unclear how Apple is restricting the use of generative AI tools and whether it's prohibiting their use completely. But in Samsung's case, the company restricted the length of employees' ChatGPT prompts to a kilobyte, or 1024 characters of text.

Large language models like OpenAI's become better the more people use them because users' interactions are sent back to developers for further training. ChatGPT's terms and conditions, for instance, state that conversations "may be reviewed by [its] AI trainers to improve [its] systems." For a secretive company like Apple, limiting the use of such tools doesn't come as a surprise. That said, OpenAI introduced a new privacy control setting in April that enables users to switch off their chat histories so that their conversations can't be used for training. The company made it available after it had to pull ChatGPT for a few hours due to a bug that showed users other people's chat histories.

Not much is known about Apple's LLM projects at the moment, if there truly are any, but all its AI efforts are under the supervision of John Giannandrea, who used to lead Google's search and AI teams. The tech giant has also snapped up a number of AI startups over the past few years. When asked about AI in an interview recently, Apple chief Tim Cook hinted that the tech giant is taking a cautious approach by saying: "I do think it's very important to be deliberate and thoughtful in how you approach these things."

OpenAI is tightening up ChatGPT’s privacy controls. The company announced today that the AI chatbot’s users can now turn off their chat histories, preventing their input from being used for training data.

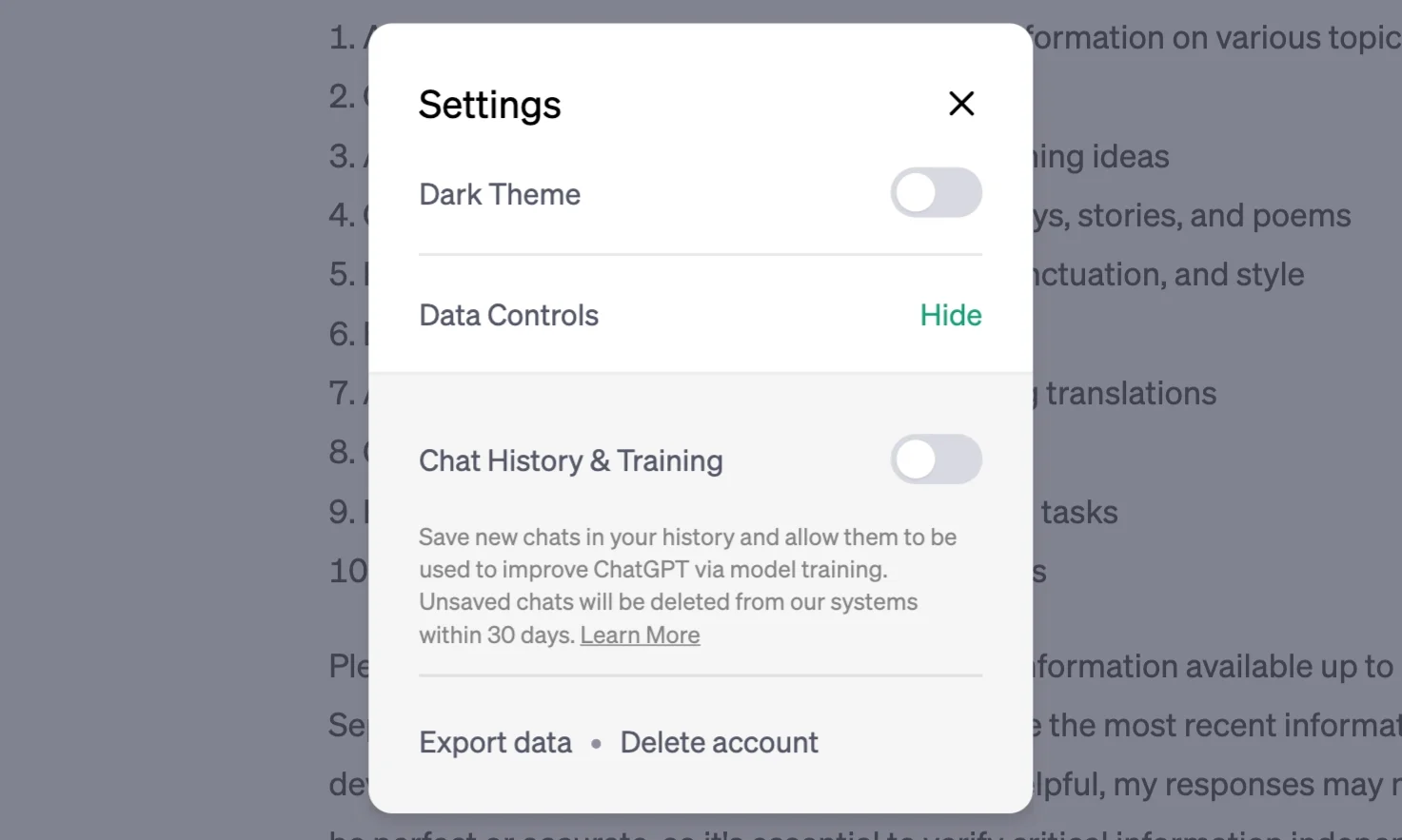

The controls, which roll out “starting today,” can be found in ChatGPT’s user settings, in a new section labeled Data Controls. After toggling the switch off for “Chat History & Training,” you’ll no longer see your recent chats in the sidebar.

Even with history and training turned off, OpenAI says it will still store your chats for 30 days. It does this to prevent abuse, with the company saying it will only review them if it needs to monitor for abuse. After 30 days, the company says it permanently deletes them.

OpenAI also announced an upcoming ChatGPT Business subscription in addition to its $20 / month ChatGPT Plus plan. The Business variant targets “professionals who need more control over their data as well as enterprises seeking to manage their end users.” The new plan will follow the same data-usage policies as OpenAI’s API, meaning the company won’t use your data for training by default. The plan will become available “in the coming months.”

Finally, the startup announced a new export option, letting you email yourself a copy of the data it stores. OpenAI says this will not only allow you to move your data elsewhere, but it can also help users understand what information it keeps.

Earlier this month, three Samsung employees were in the spotlight for leaking sensitive data to the chatbot, including recorded meeting notes. By default, OpenAI uses its customers’ prompts to train its models. The company urges its users not to share sensitive information with the bot, adding that it’s “not able to delete specific prompts from your history.” Given how quickly ChatGPT and other AI writing assistants blew up in recent months, it’s a welcome change for OpenAI to strengthen its privacy transparency and controls.