Apple is releasing a free software update that will inject its first dose of artificial intelligence into its iPhone 16 lineup as the trendsetting company tries to catch up with technology’s latest craze.

The upgrade to the iOS 18 operating system on Monday arrives more than a month after four iPhone 16 models equipped with the special computer chip needed to power the AI features went on sale at prices ranging from $800 to $1,200. Last year’s premium models — the iPhone 15 Pro and iPhone 15 Pro Max — also possess a processor that will enable the AI technology after the software update is installed.

Recent versions of Apple’s iPad and Mac computers can also be updated with the software.

Countries outside the U.S. won’t be getting the AI software for their iPhones until next year at a date still to be determined. Apple spent the past five weeks testing the AI software among an audience of iPhone owners who signed up to help the company fine-tune the technology.

The AI infusion is supposed to transform Apple’s often bumbling virtual assistant Siri into a more conversational, versatile, and colorful companion whose presence will be denoted by a glowing light that circles the iPhone’s screen as requests are being handled.

While Apple is promising Siri will be able to perform more tasks and be less prone to becoming confused, it won’t be able to interact with other apps installed on the iPhone until another software update comes out at a still-unspecified date.

Other AI features included in this software update will handle a variety of writing and proofreading tasks, and summarize the content of emails and other documents. The AI also will provide a variety of editing tools to alter the appearance of photos and make it easier to find old pictures.

Other AI tricks still to come in future software updates will include the ability to create customized emojis on the fly or conjure other fanciful imagery upon request. Apple also plans to eventually enable its AI suite to get a helping hand from OpenAI’s ChatGPT when users want it.

Most of the AI features Apple introduced Monday are already available on Android-powered smartphones that Samsung and Google released earlier this year.

To distinguish its approach from the early leaders in AI, the iPhone’s suite of new technology is being marketed as “Apple Intelligence.” Apple is also promising its AI features will do a far better job protecting the privacy of iPhone owners by either running the technology on the device or corralling it in a fortress-like data center when some requests have to be processed remotely.

Because most iPhones currently in use around the world don’t have the computer chip needed for Apple’s AI, the technology is expected to drive huge demand for the new models during the holiday season and into next year, too. That’s the main reason why Apple’s stock price has soared 18% since the Cupertino, California, company previewed its AI strategy at a conference in early June. The run-up has increased Apple’s market value by about $500 billion, catapulting it closer to becoming the first U.S. company to be worth $4 trillion.

Apple will give investors their first glimpse at how the iPhone 16 is faring Thursday when the company posts quarterly financial information for the July-September quarter — a period that includes the first few days the new models were on sale.

Demand for the high-end iPhone 15 models ticked upward as prices for them fell and the excitement surrounding Apple’s entrance into the AI market ramped up, according to an assessment of the smartphone market during the most recent quarter by the research firm International Data Corp.

Apple’s iPhone shipments rose 3.5 percent from the same time last year to 56 million worldwide during the July-September period, second only to Samsung, according to IDC. The question now is whether Apple’s gradual release of more AI will cause owners of older iPhones to splurge on the new models during the holidays, “future-proofing their purchases for the long term,” said IDC analyst Nabila Popal.

Also on Monday, Apple said that with the software update some AirPods wireless headphones can be used as hearing aids.

An estimated 30 million people — 1 in 8 Americans over the age of 12 — have hearing loss in both ears. Millions would benefit from hearing aids but most have never tried them, according to the National Institute on Deafness and Other Communication Disorders.

However, with today’s OS updates, that has begun to change. Each update released today includes a far deeper set of new features than any other ‘.1’ release I can remember. Not only are the releases stuffed with a suite of artificial intelligence tools that Apple collectively refers to as Apple Intelligence, but there are a bunch of other new features that Niléane has written about, too.

The company is tackling AI in a unique and very Apple way that goes beyond just the marketing name the features have been given. As users have come to expect, Apple is taking an integrated approach. You don’t have to use a chatbot to do everything from proofreading text to summarizing articles; instead, Apple Intelligence is sprinkled throughout Apple’s OSes and system apps in ways that make them convenient to use with existing workflows.

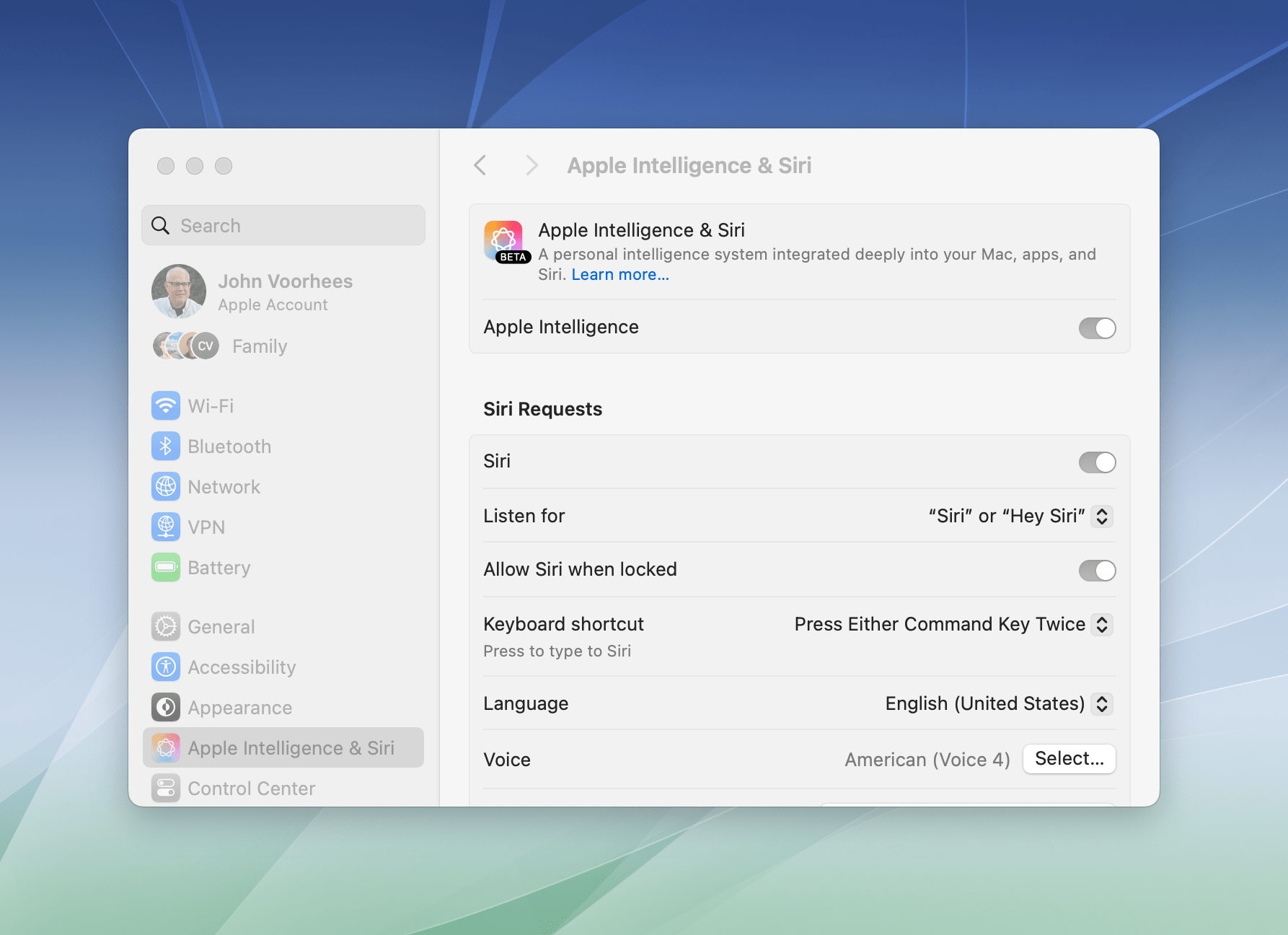

If you don’t want to use Apple Intelligence, you can turn it off with a single toggle in each OS’s settings.

Apple also recognizes that not everyone is a fan of AI tools, so they’re just as easy to ignore or turn off completely from System Settings on a Mac or Settings on an iPhone or iPad. Users are in control of the experience and their data, which is refreshing since that’s far from given in the broader AI industry.

The Apple Intelligence features themselves are a decidedly mixed bag, though. Some I like, but others don’t work very well or aren’t especially useful. To be fair, Apple has said that Apple Intelligence is a beta feature. This isn’t the first time that the company has given a feature the “beta” label even after it’s been released widely and is no longer part of the official developer or public beta programs. However, it’s still an unusual move and seems to reveal the pressure Apple is under to demonstrate its AI bona fides. Whatever the reasons behind the release, there’s no escaping the fact that most of the Apple Intelligence features we see today feel unfinished and unpolished, while others remain months away from release.

Still, it’s very early days for Apple Intelligence. These features will eventually graduate from betas to final products, and along the way, I expect they’ll improve. They may not be perfect, but what is certain from the extent of today’s releases and what has already been previewed in the developer beta of iOS 18.2, iPadOS 18.2, and macOS 15.2 is that Apple Intelligence is going to be a major component of Apple’s OSes going forward, so let’s look at what’s available today, what works, and what needs more attention.

Siri

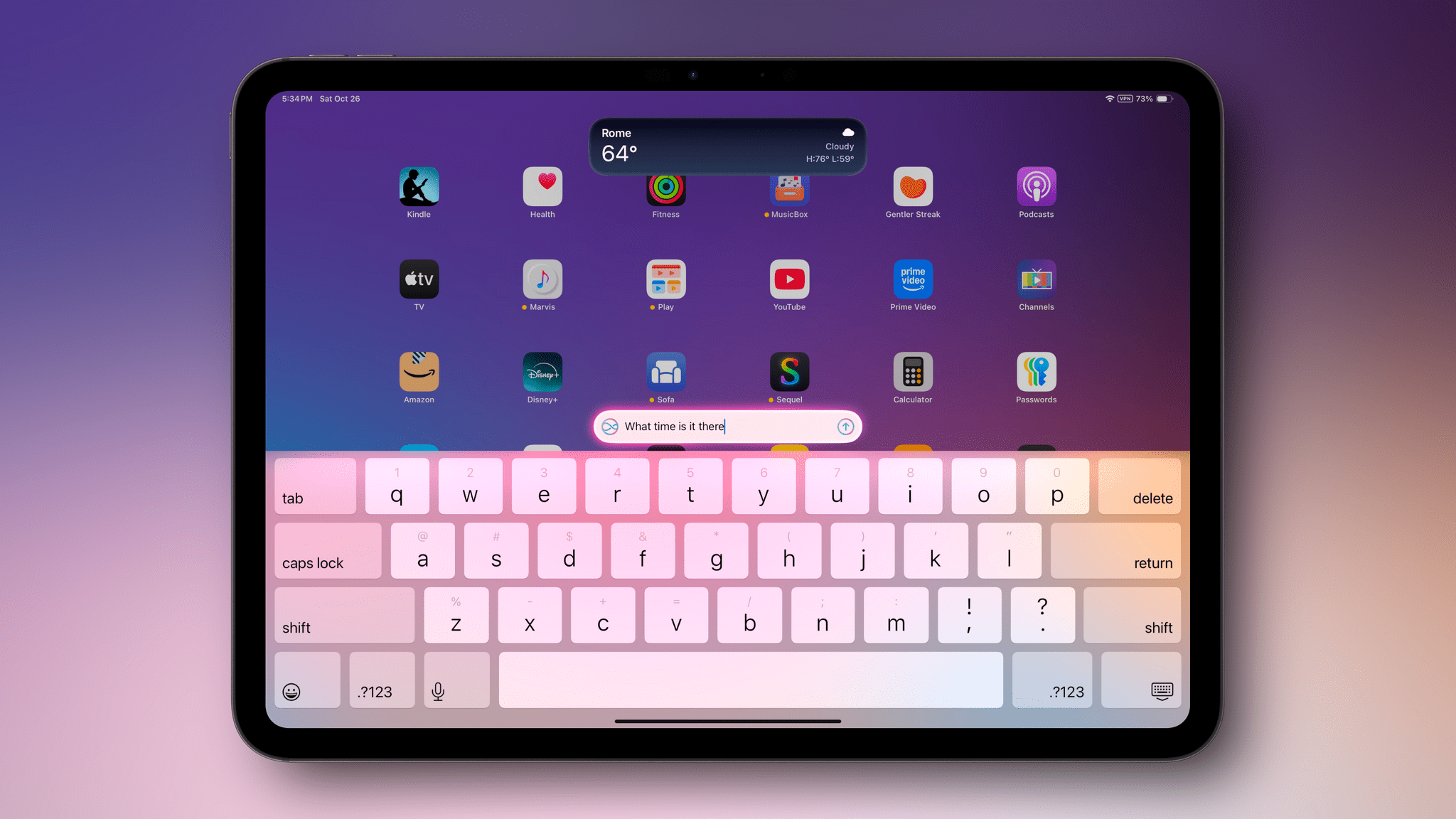

Siri announces itself with a flourish in Apple’s latest OS updates. Trigger it on your iPhone, for example, and a shimmering, iridescent glow animates around the edges of your screen as long as the assistant is active. Similar animations play on the iPad and Mac, too. It’s a very cool effect that sets high expectations Siri can’t live up to because many of the features Apple has planned for it won’t be out for months to come.

Down the road, the company says you’ll be able to ask the assistant to perform tasks in apps on your behalf using the technology underlying Shortcuts. Apple also promises that Siri will understand your personal context. If Apple can pull that off, Siri will be leagues ahead of where it is today, but it’s not there yet.

Still, there is more to Siri in today’s updates than just a fancy animation. It may not understand your personal context yet, but Siri does have contextual awareness in the sense that it can now follow the meaning of multiple requests made one after the other. Here’s a simple example:

Me: “What time is it in Rome?”

Siri: “1:20 AM.” (Correct.)

Me: “What’s the weather there?”

Siri: “62°F.” (Also correct.)

Me: “How do I get there?”

Siri: Opens Maps to show me where I am and where Rome, Italy is, as well as to let me know Maps can’t do overseas directions.

That sort of interaction is handy. I didn’t have to repeat “Rome” every time I spoke, and even though personal context is not incorporated into Siri yet, the assistant responded (perhaps coincidentally) with information for Rome, Italy, which is far more relevant to me personally than a town like Rome, Georgia – even though Georgia is far closer to my home.

Siri also does a better job than before of understanding if you trip over your words and correct yourself. That means if you ask about the weather in Philadelphia and, halfway through speaking your request, you say, “No, I mean Baltimore,” Siri will understand and give you the weather conditions in Baltimore. It’s a small change that will make a big difference if you’re like me and find yourself self-editing requests aloud.

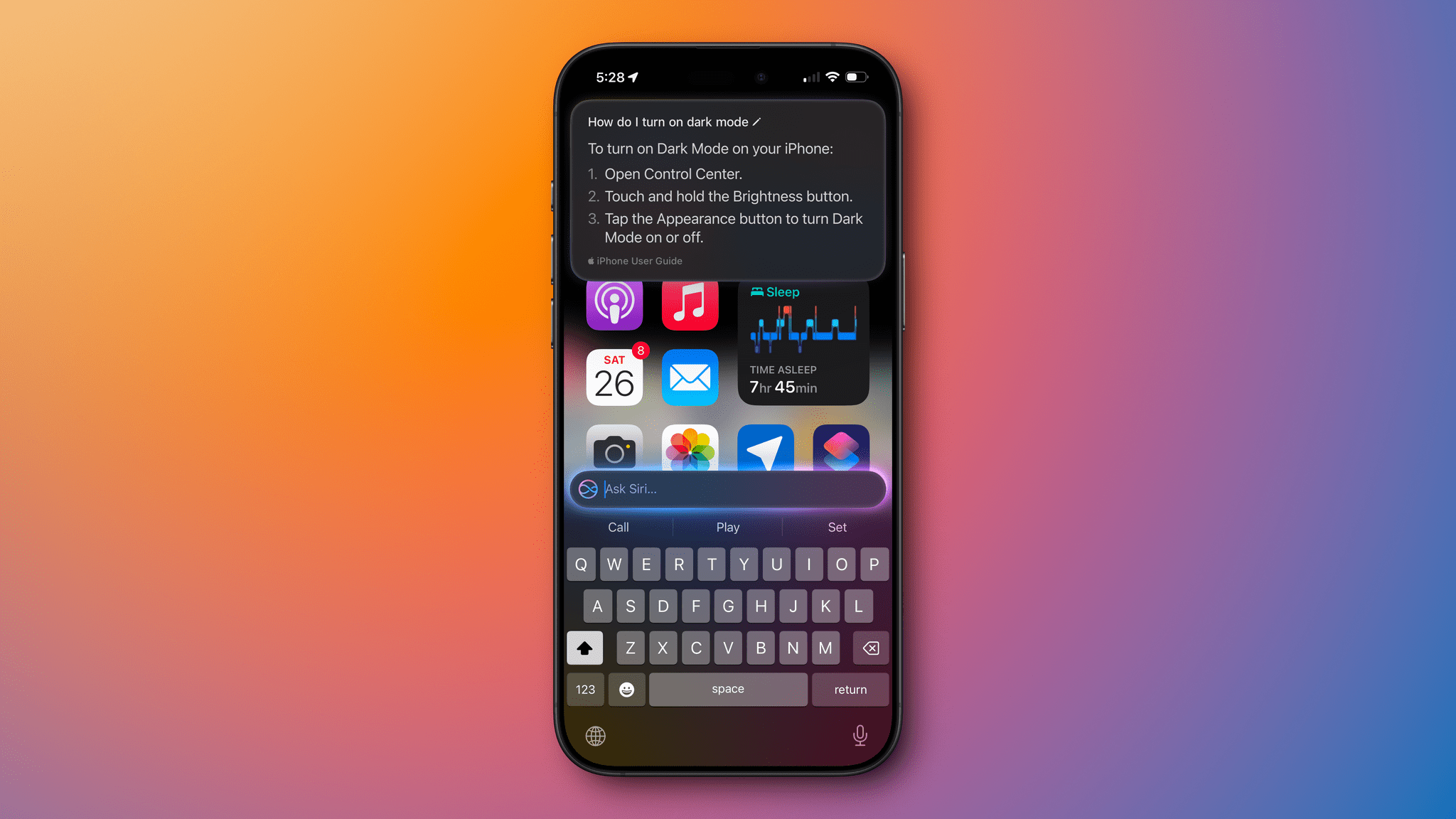

Siri also now supports documentation for Apple products. If you ask how to turn on Dark Mode, for example, you’ll get a simple list of instructions pulled from the iPhone User Guide. I don’t foresee myself using this much, but it’s easier than opening Safari and typing a search, even if the same information is often the first result of a Google search.

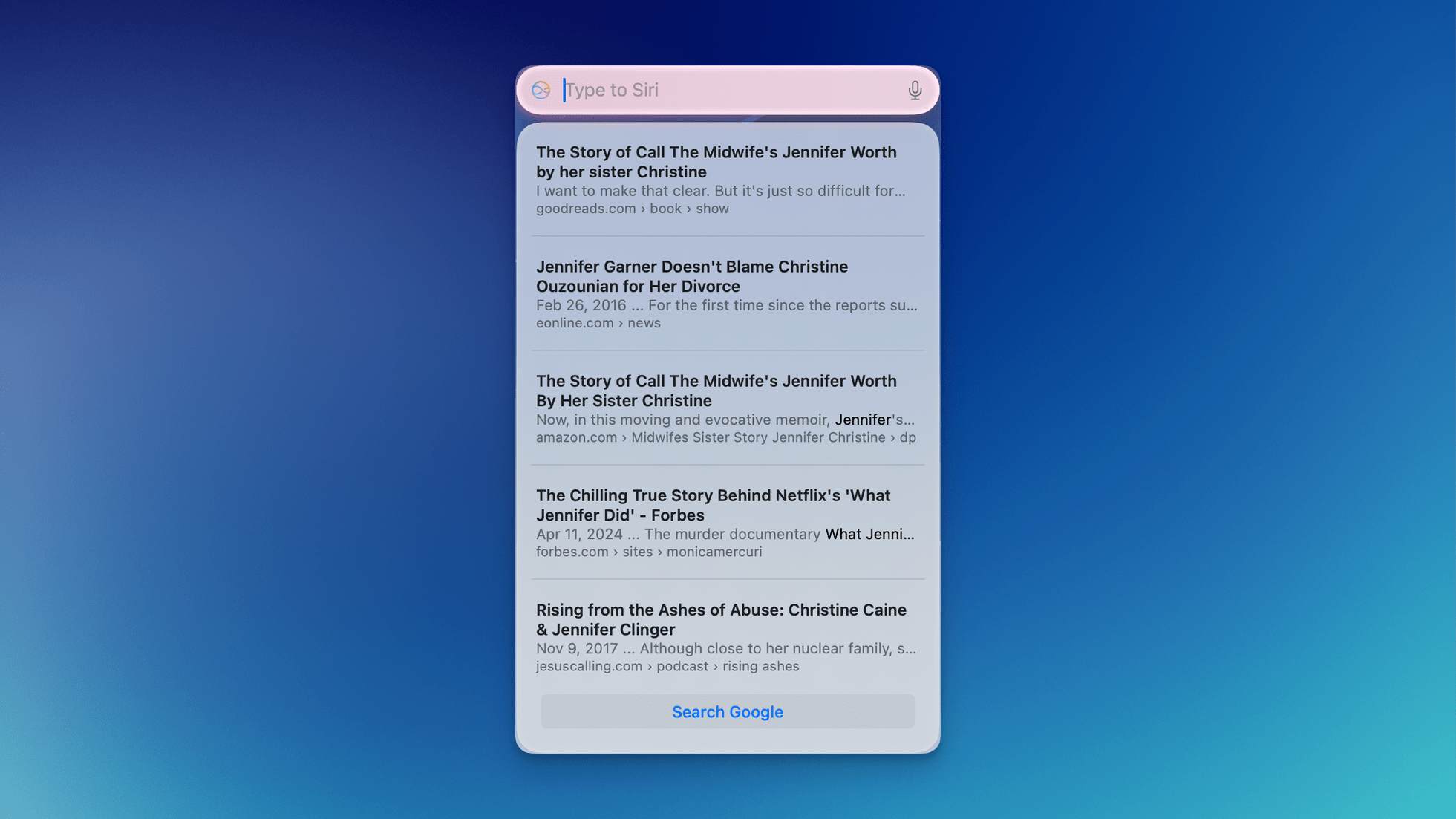

Perhaps the biggest change of all is the new ability to type requests to Siri. The assistant defaults to verbal requests in most cases, but you can tap a result on the iPhone or iPad to edit it and even use a completely different query if you want. You can also double-tap on the bottom of an iPhone or iPad’s screen, use Globe + S on any compatible keyboard, or enable a double-tap on the Command key on your Mac’s keyboard to activate Siri. On the Mac, you can also drag the Siri text field anywhere onscreen, where it will sit pinned above your other windows, ready to take requests by voice or typing at any time.

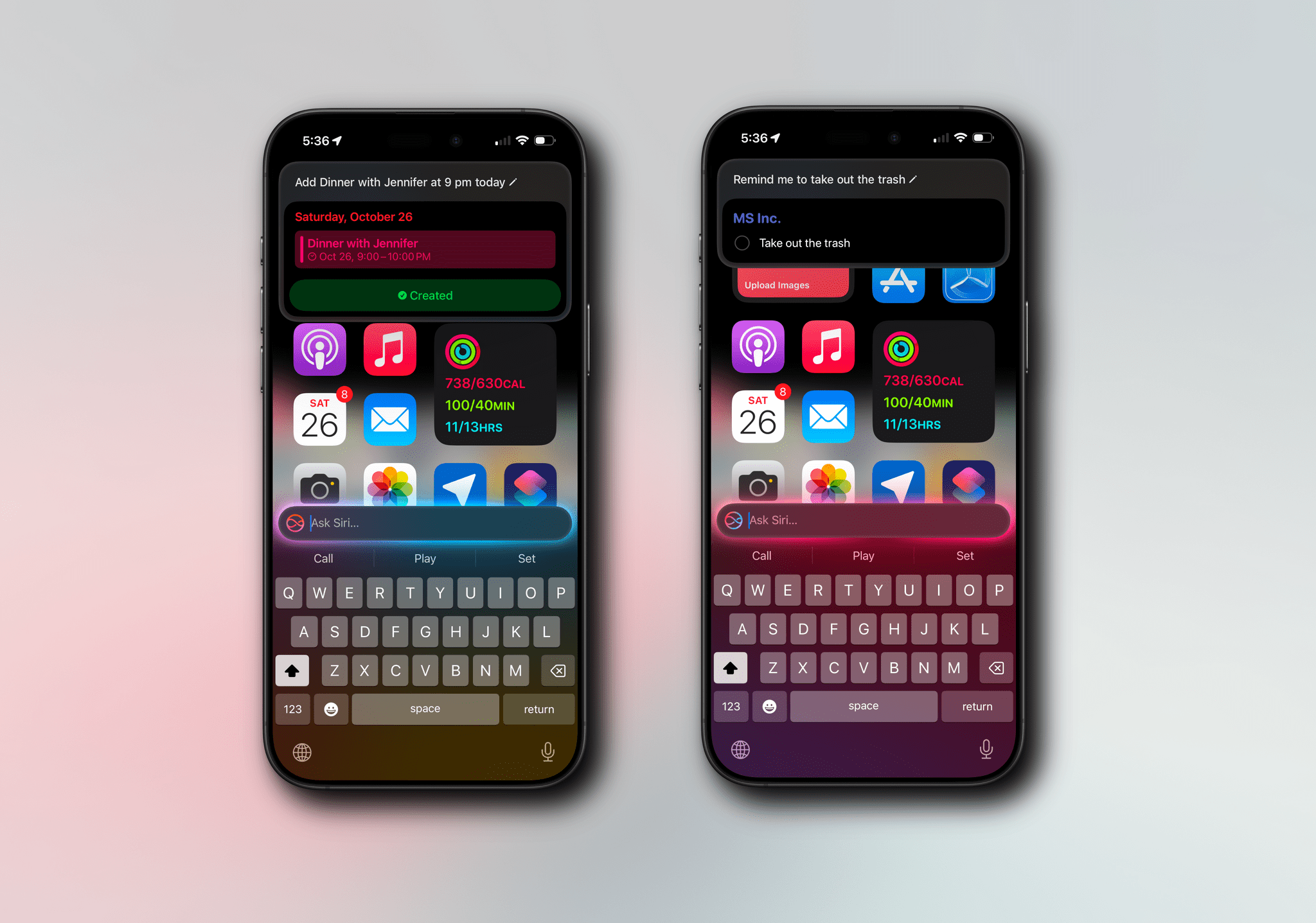

Of all the changes to Siri in today’s updates, Type to Siri is by far the most useful. On my Mac, I can leave it open at all times and, with a double-tap of the Command key, add a task to Reminders or an event to Calendar. It solves the problem of neither app having a global hotkey for quick entry. I’m not sure what other uses I’ll find for Type to Siri in the future, but for now, jotting down tasks or events as they come to me is plenty.

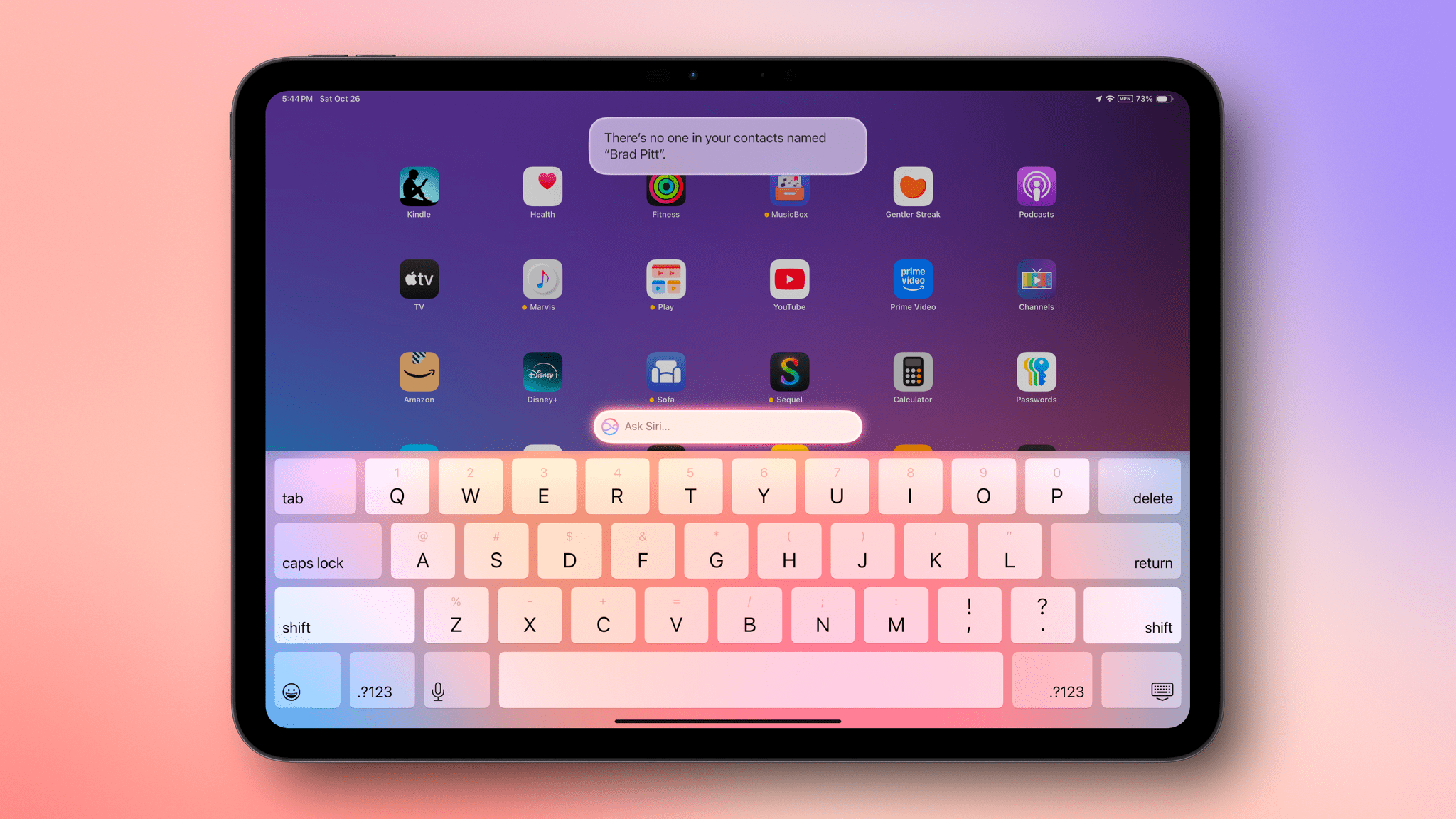

So far so good, but there’s one big caveat when it comes to Siri: it’s the same old Siri that isn’t any smarter than it was before today’s updates. It can pull information out of apps like Contacts, Calendar, and Messages, which isn’t new, but the results remain spotty at best. I can reliably get Siri to find a phone number or email address from Contacts and show me my schedule, but it often gets stuck in a particular mode, which leads to incorrect or unhelpful results.

For example, if I’ve asked about people in my contacts and then ask, “Who is Brad Pitt?” Siri is likely to tell me that there’s no one by that name in my contacts. Fair enough, but that’s not what I meant. I wanted information on the actor instead. If, however, I follow a question about someone in my contacts with a request for the weather forecast and then ask who Brad Pitt is, I’ll get a short bio of the actor.

I’ve seen the same sort of behavior in Messages, too. If I’ve asked a question that requires a web result and then ask Siri something like, “What did Jennifer tell me about Christine yesterday?” I’ll get Google results, including an obituary for someone named Jennifer Christine.

If I follow that with, “Who is Jennifer?” Siri will open my wife’s contact card. Then when I follow up by repeating my question, “What did Jennifer tell me about Christine yesterday?” Siri will read an excerpt from my iMessages with Jennifer containing the information I wanted to remember. The order of requests shouldn’t matter, but it does because Siri seems to get stuck in a particular mode and doesn’t always handle switching to another gracefully.

Siri is good at showing you your schedule for a particular day, too. However, it struggles to find individual events unless you phrase them exactly as they’re written in your calendar, and even that rarely works. For example, I asked Siri when Michael’s birthday is. I figured it would tell me about my nephew Michael’s birthday, which is on my calendar a few days from now. Instead, it told me that Michael Jackson was born on August 29th, 1958. I guess there’s just One True Michael.

Apple’s marketing promises results Siri can’t yet deliver. Source: Apple.

Siri’s data retrieval struggles aside, it’s still good at setting timers, including multiple timers. It will find people in the Contacts app, tell you the time and weather, provide driving directions, and search the web. However, Siri could already do those things. Its ability to understand multiple requests made in a row, respond better when you trip over your own words, and accept typed requests are all meaningful improvements to the feature, but its core “intelligence” is still a problem.

To be fair, Apple has said additional Siri “smarts” are coming later. If so, why enable new interaction modes now? Siri’s new features are welcome, but the UI and Apple’s marketing of Apple Intelligence have written a check that Siri can’t cash yet. I appreciate the new features; however, what I really want is personal contextual awareness and App Intents-powered automations.

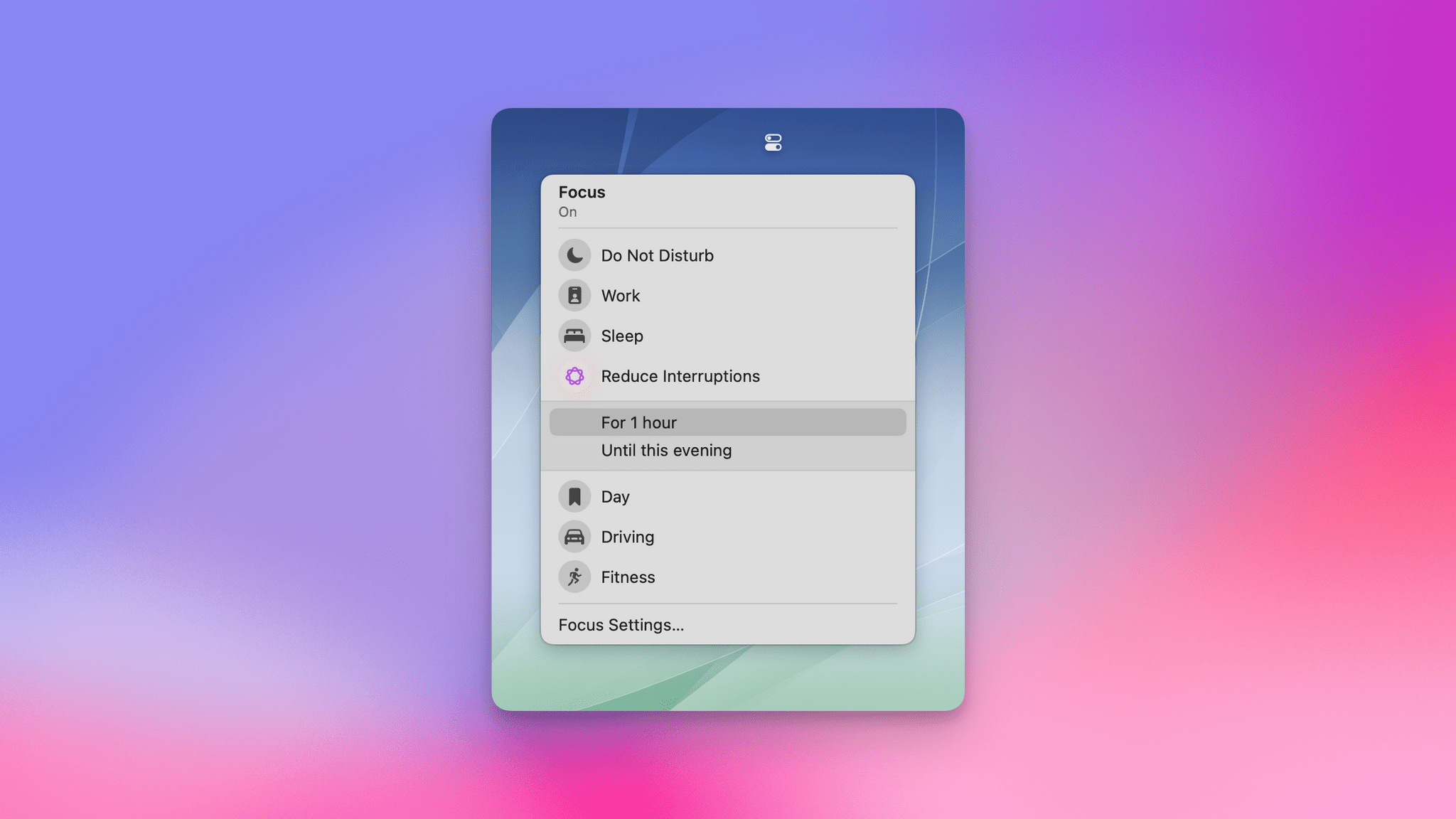

Reduce Interruptions

As I’ve been writing this review, I’ve turned to Apple Intelligence’s new Reduce Interruptions Focus mode multiple times. It’s similar to Do Not Disturb, but it still lets through some notifications that it deems important, which is a key difference.

In my experience, Reduce Interruptions works well. Today as I was writing, a call came through from my wife, and I saw a notification of a text message from Federico, neither of which I’d want to be blocked. What didn’t get through were messages from Discord where some MacStories team members were chatting about iOS 18.2.

Now, I could have (and have before) created Focus modes with elaborate rules about which people and apps can break through while I’m working, but with Reduce Interruptions, I’ve been able to dispense with those other Focus modes and simply turn it on when I need some “mostly quiet” time. There’s also a new ‘Intelligent Breakthrough & Silencing’ toggle in Focus mode settings that allows you to apply a similar effect to any Focus mode. So far, though, Reduce Interruptions has been all I’ve really needed. Two thumbs up, would enable again.

Photos

There are three Apple Intelligence features available in the Photos app:

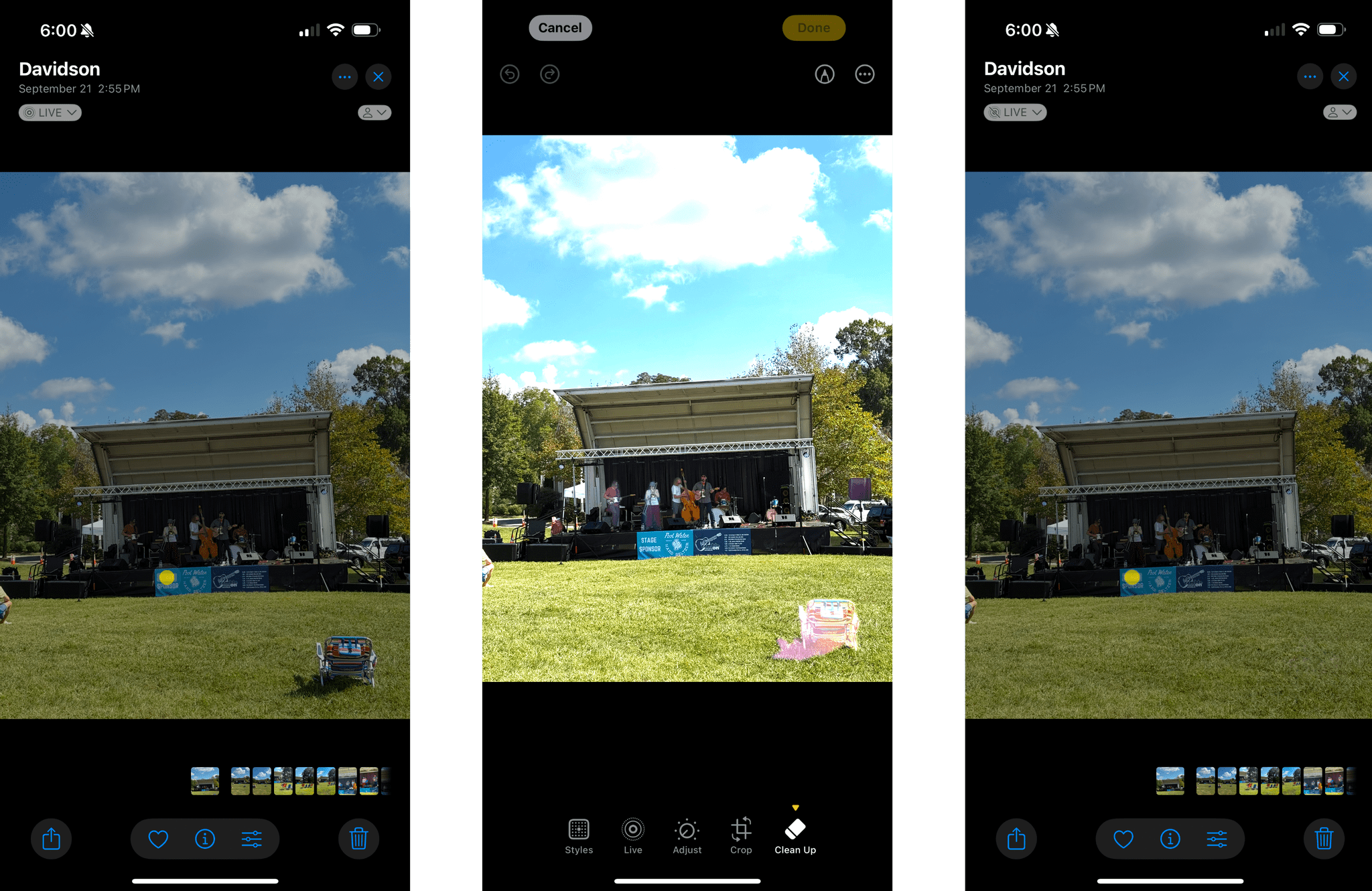

- Clean Up

- Memory Movie Creation

- Improved search

Like similar tools I’ve used for years, Clean Up lets you tap, circle, or paint over an item in a photo with your finger to remove it. My first test was to erase a lawn chair from a grassy field. Clean Up did an excellent job, replacing the brightly colored chair with grass. If I zoomed in closely, I could tell that the grass was different from the area surrounding the edit, but if I were flicking through my photos and didn’t realize that one had been edited, I doubt I would have noticed. However, I do appreciate that the feature adds an ‘Edited with Clean Up’ message in the photo’s metadata and that it’s non-destructive, meaning it can be reversed at any time. In my experience, Clean Up works best with image elements that have clearly defined outlines with good lighting and high contrast.

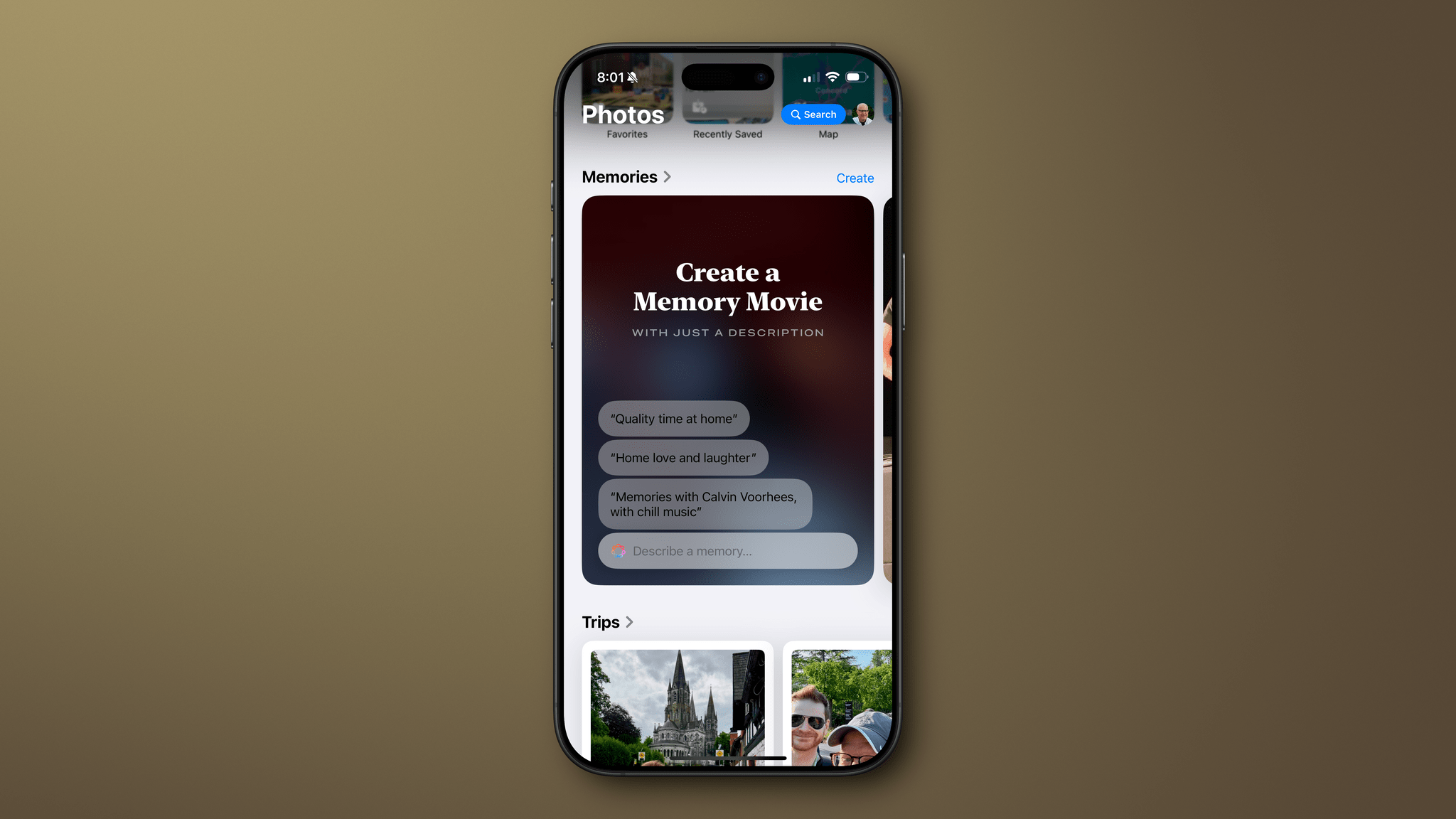

The second feature, which is only available on the iPhone and iPad, is the ability to create Memory Movies based on text prompts. I’m sure a lot of people will want to try this as soon as they install iOS or iPadOS 18.1, but you’ll have to be patient if you have a big photo library. Apple Intelligence processes photos when your device is connected to power, and depending on how many photos you have, it can take days. I installed iOS 18.1 on my new iPhone 16 Pro Max on Friday, September 20, and it was still processing photos on Sunday afternoon. I’m not sure when it finished, but it eventually did.

My results with Memory Movies have been mixed. Using “At the coffee shop” as a prompt was just as likely to include a photo of a bar or restaurant as it was a coffee shop. The feature also has a short memory when it comes to concepts like your home. I picked one of Apple’s suggested prompts, “Home love and laughter,” and the results were all photos taken where I live now; there wasn’t a single picture from the decades I lived in Illinois. Still, the time saved is worth it because it’s easier to generate a Memory Movie that mostly works and edit it than it is to start from scratch.

I don’t have a lot to say about Photos search, which now supports natural language and can find frames inside of videos, too. I’ve always thought search worked reasonably well in Photos, and it still does. It seems to handle a wider range of requests that don’t need to be as tightly defined as before, though, which is welcome.

Overall, Photos’ Clean Up feature works well. We can debate whether erasing people and other things from pictures is a good thing because it alters the reality of what was captured. In my opinion, it’s wild and a little disconcerting to completely erase someone you know from one of your photos as opposed to someone in the background who photobombed your family portrait. But in practice, I expect Clean Up will primarily be used to remove smaller distractions in a way that will improve the images without distorting reality in a meaningful way.

Improved search and simpler Memory Movie creation are examples of artificial intelligence implementations that show off how it can make it easier to access and enjoy large collections of information. With so many people carrying tens of thousands of images on their iPhones and other devices, leveraging AI to make them more accessible is a win, although it’s disappointing that Memory Movies generation isn’t available on the Mac, too.

Writing Tools

I’m not the target audience for most of the Apple Intelligence Writing Tools. I write for a living, and I’m not interested in having AI do it for me. That said, I expect that Writing Tools have the potential to be some of the most popular of Apple’s AI features, but they need to improve to really take off.

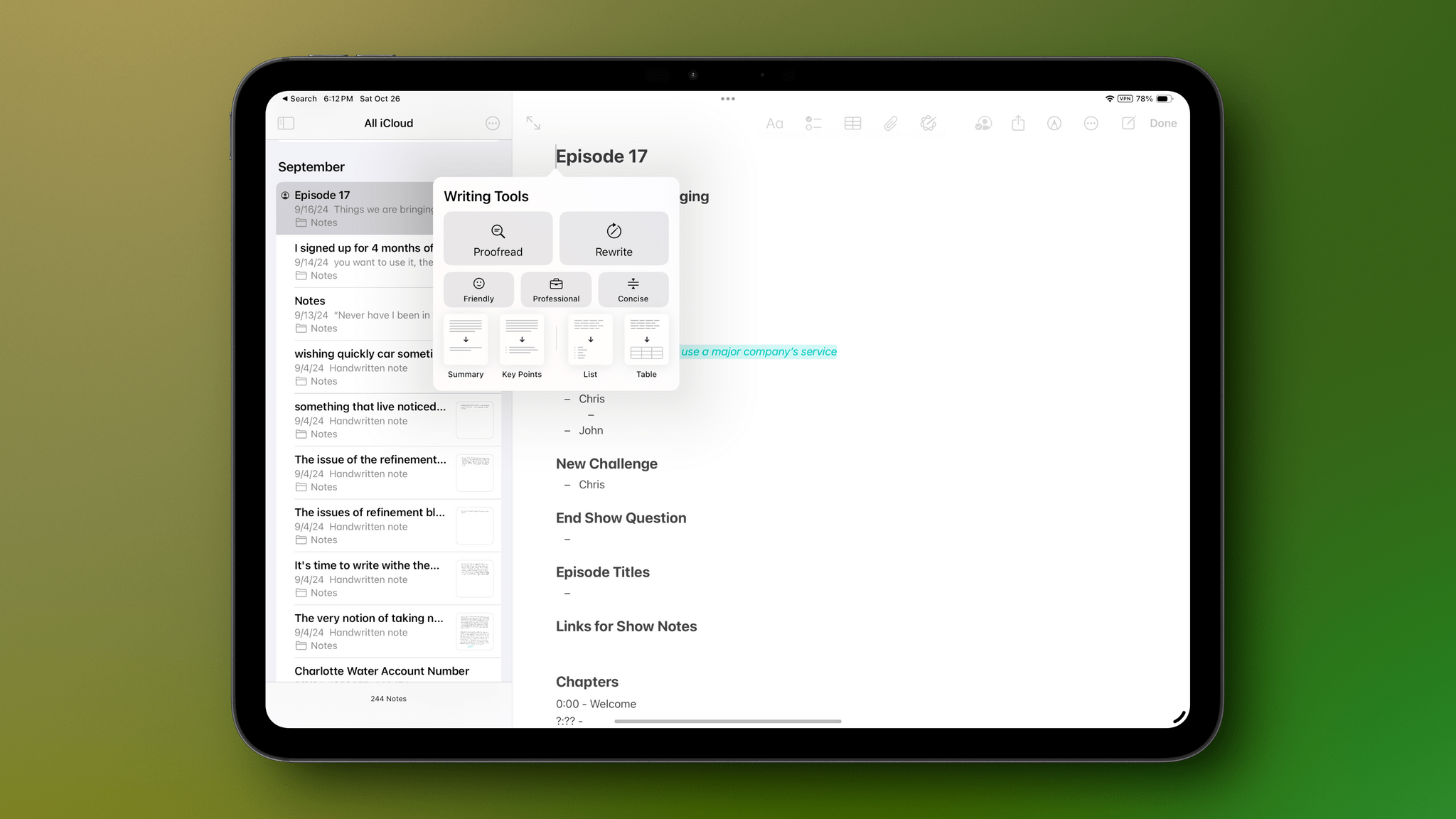

Writing Tools are found throughout Apple’s OSes. Practically anywhere you can type or select text, you can access Writing Tools. On iOS and iPadOS, you’ll find them in the context menu and, often, the toolbar above the virtual keyboard. On macOS, a little Apple Intelligence icon appears when you select text, possibly portending a more fully-featured PopClip-like context menu in a future version of macOS. Writing Tools can also be accessed from the right-click menu and from the Edit menu when you’re using an app with a text field on the Mac.

One result of these changes is that the iOS and iPadOS context menus are getting very crowded. ‘Writing Tools’ is sometimes the second option after ‘Copy,’ pushing options like ‘Search Web’ much further down the line of as many as four context menu segments. I hope in the future that Apple gives users the ability to customize the context menu to prioritize the tools they use most, because I know for me, the menu items I rely on most are scattered across the entire menu.

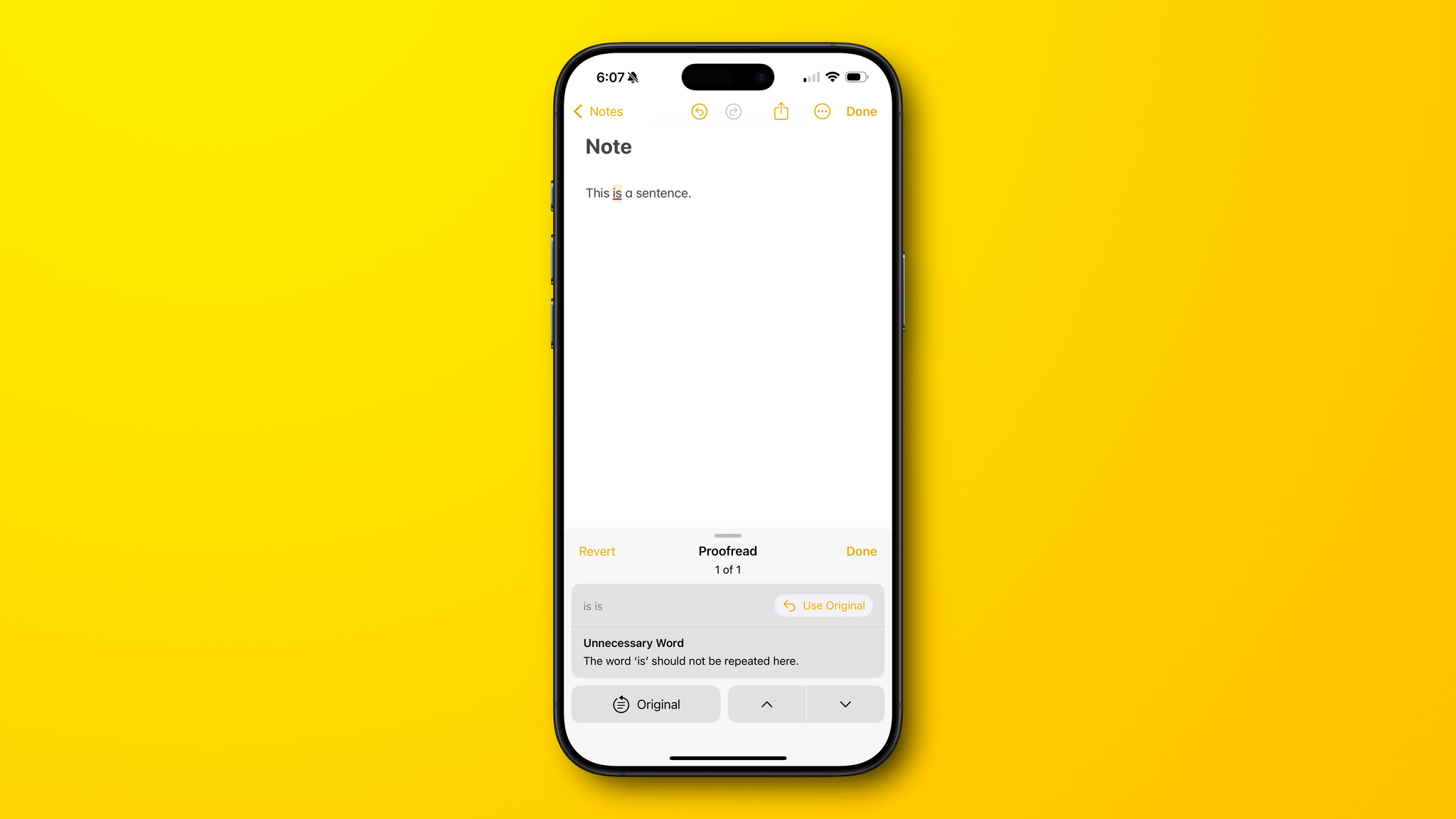

Where Writing Tools are fully integrated into an app, you can access an explanation of each suggested change.

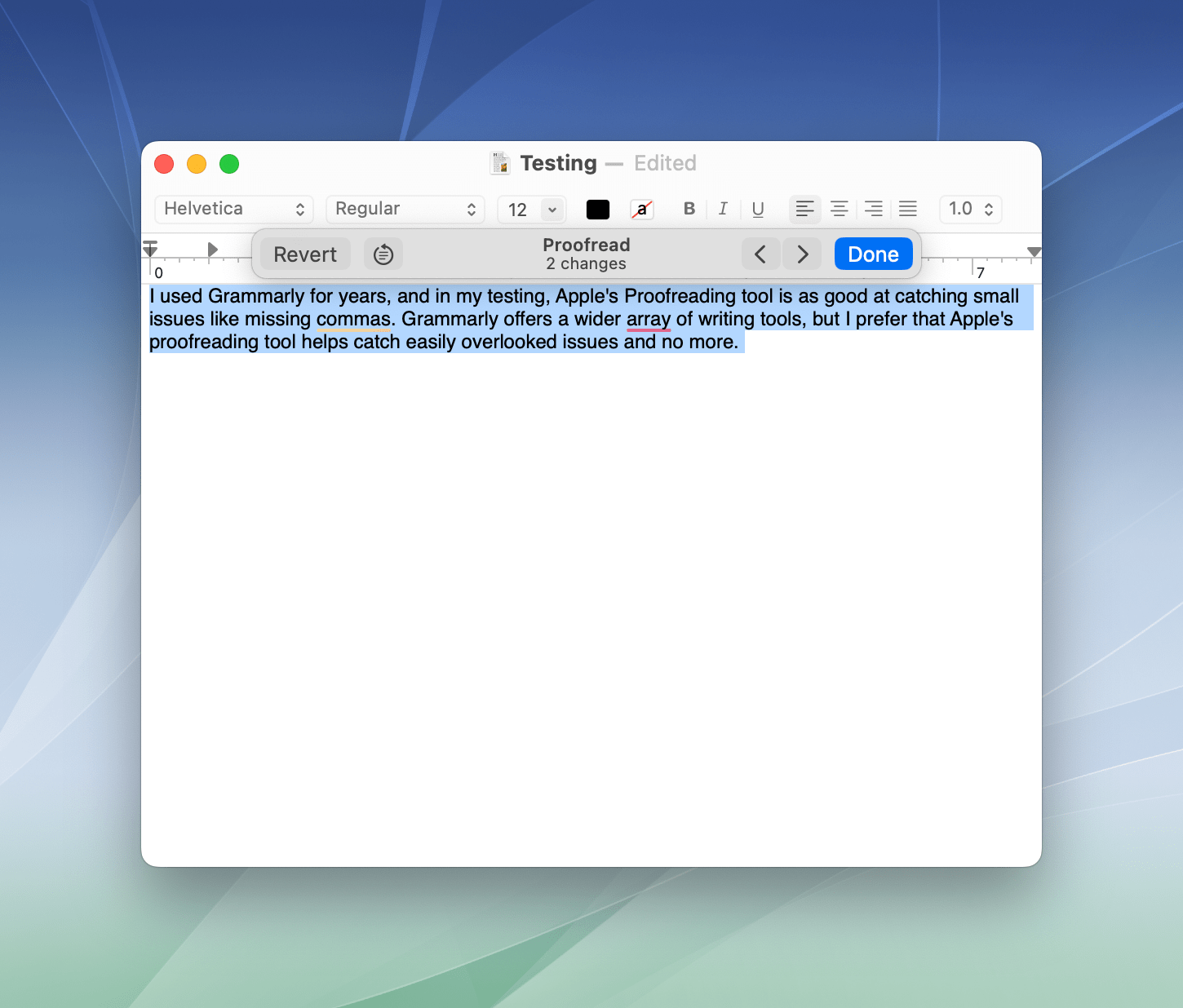

Of the Writing Tools, the one I like most is Proofreading. I’ve used Grammarly for years and appreciate its ability to catch easily overlooked errors like missing commas. Apple’s Proofreading Writing Tool is good at that, too, but the experience is different depending on the app you’re using.

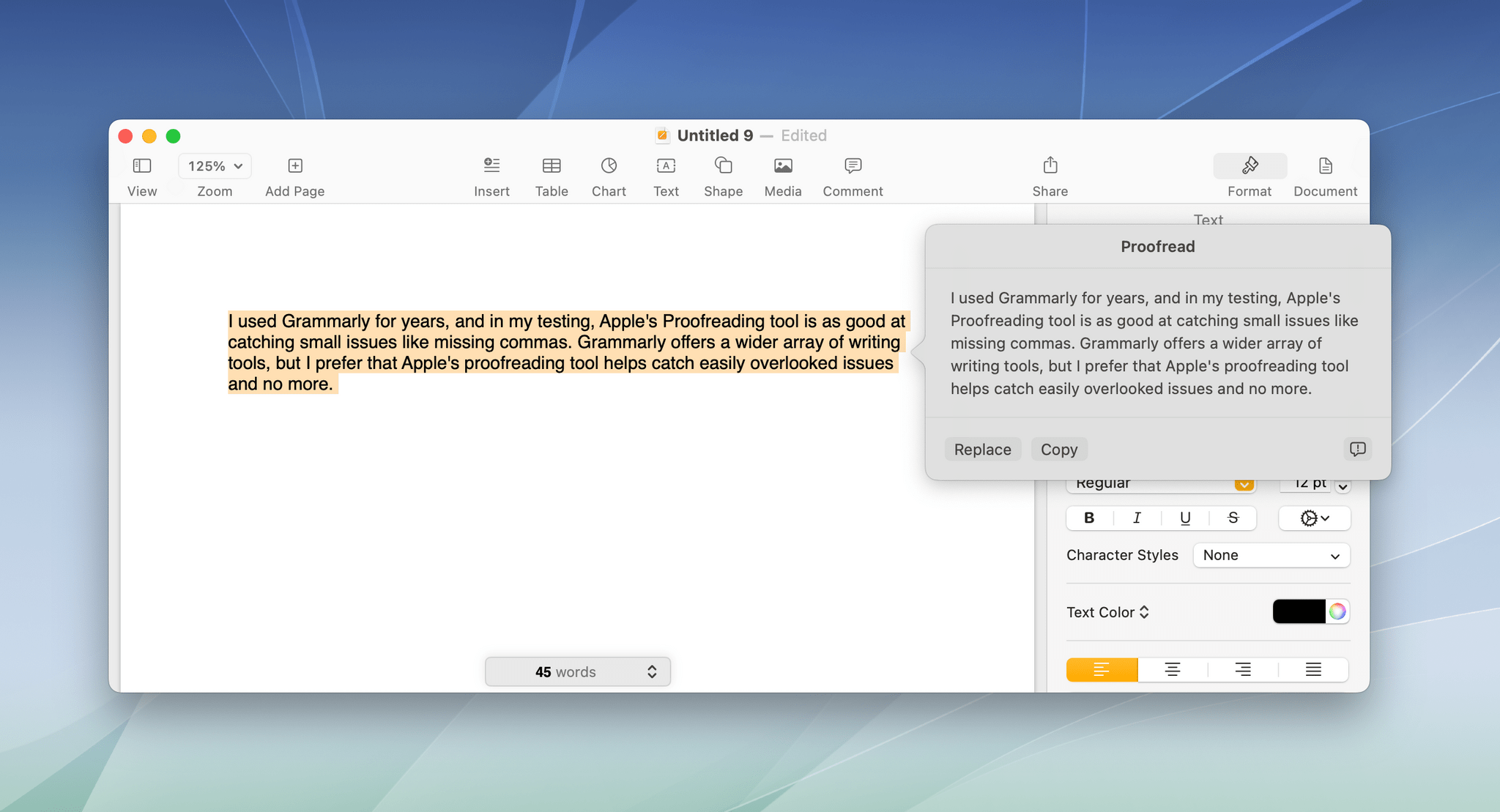

With some apps, like TextEdit on the Mac and Notes on any platform, Proofreading suggests changes that are highlighted so you can step through them, read why each change is suggested, and reject suggestions if you want, which is great. However, other apps like Pages don’t highlight suggested changes or allow you to reject individual suggestions at all, regardless of the platform you’re on. That’s a bad experience if you’re trying to proofread anything longer than a single sentence because it’s just too hard to scan for the changes without highlighting, to say nothing of the inability to reject individual changes.

The difference seems to come down to whether an app integrates with the Writing Tools APIs or is simply using the system-wide version of Writing Tools. You can tell the difference by looking for an Apple Intelligence icon that appears in an app’s toolbar or when you select text on a Mac. If you don’t see the icon in either place, the app doesn’t seem to integrate with the Writing Tools APIs.

Pages has access to a more rudimentary version of Proofreading, but it has its own built-in spelling and grammar tools as well.

Pages already has its own tools for checking spelling and grammar, so perhaps Apple Intelligence’s Proofreading tool won’t be added to it. However, I could see that changing over time as Proofreading becomes more robust and refined.

Regardless of what the future holds for Pages, the difference in the way Proofreading works in the app highlights something important: it means that any third-party app that doesn’t integrate directly with Writing Tools will have access to the inferior version of Proofreading, which is a shame. However, with the release of the developer betas for iOS and iPadOS 18.2 and macOS 15.2, Apple gave developers access to a new Writing Tools API, so hopefully the wait for full Proofreading integration in third-party apps won’t be too long.

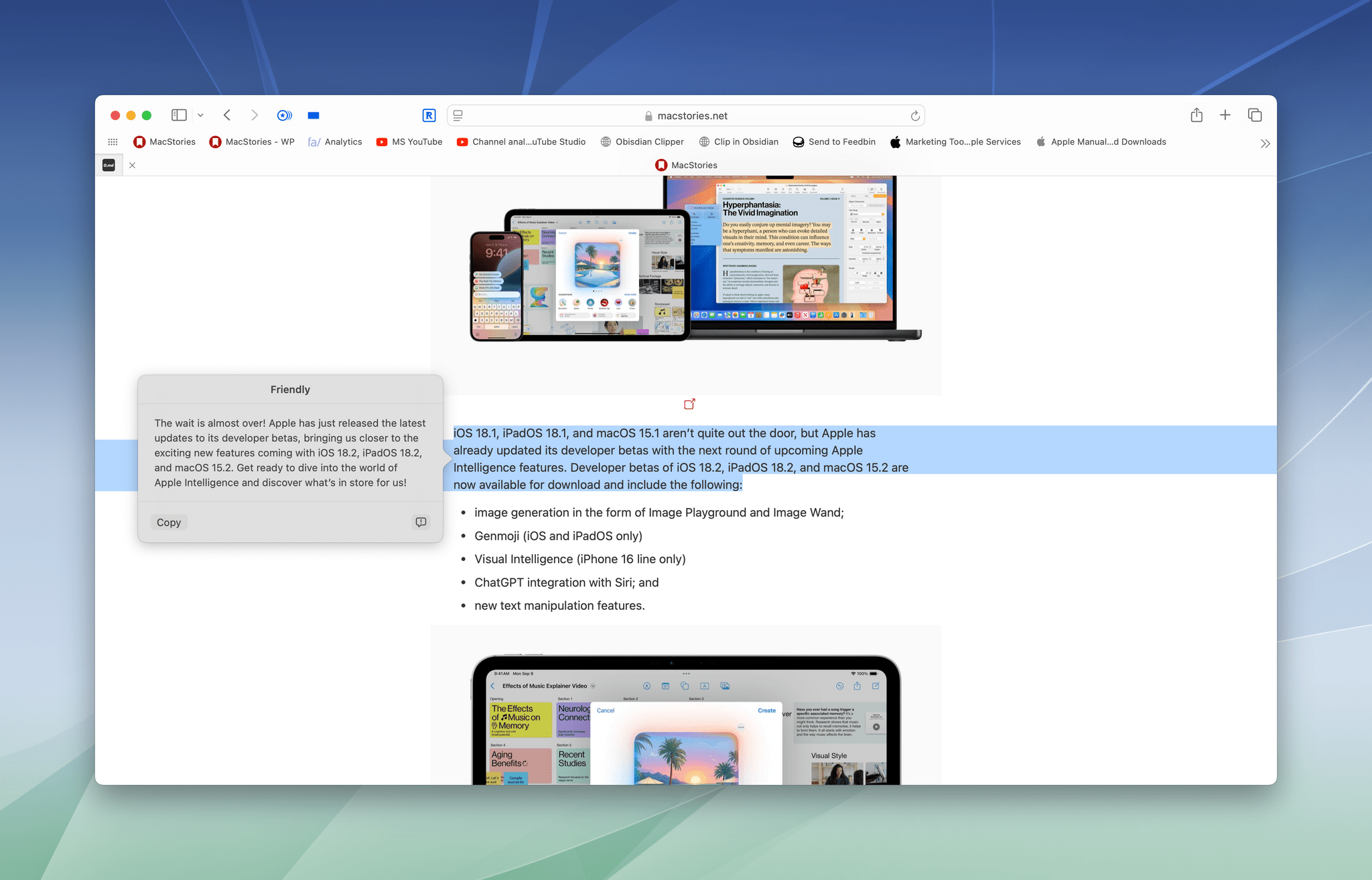

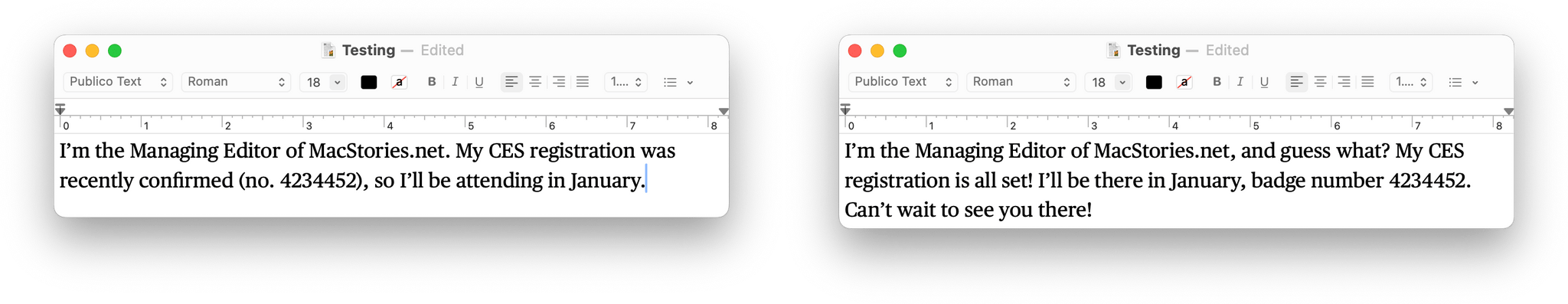

The rewriting tools’ results aren’t terrible, but they’re often just a little too over the top. What I wrote (left) and Friendly (right).

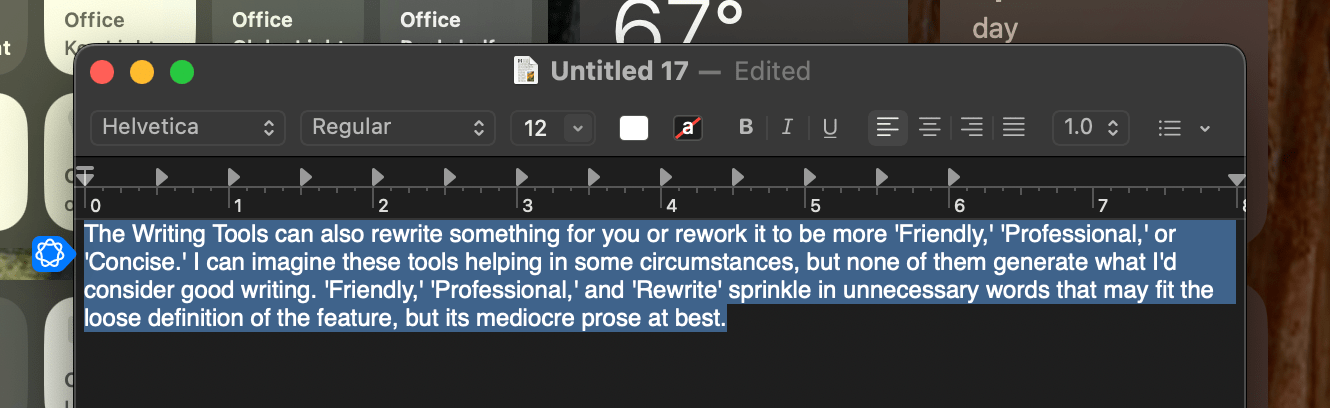

Writing Tools can also rewrite something for you or rework it to be more Friendly, Professional, or Concise. I can imagine these tools helping in some circumstances, but none of them generate what I’d consider good writing. Friendly, Professional, and Rewrite sprinkle in unnecessary words that may fit the definition of the feature, but the results are wordy and unnatural. Hopefully, with time, Apple will find a happy middle ground between the intended style and a more neutral, natural-sounding tone.

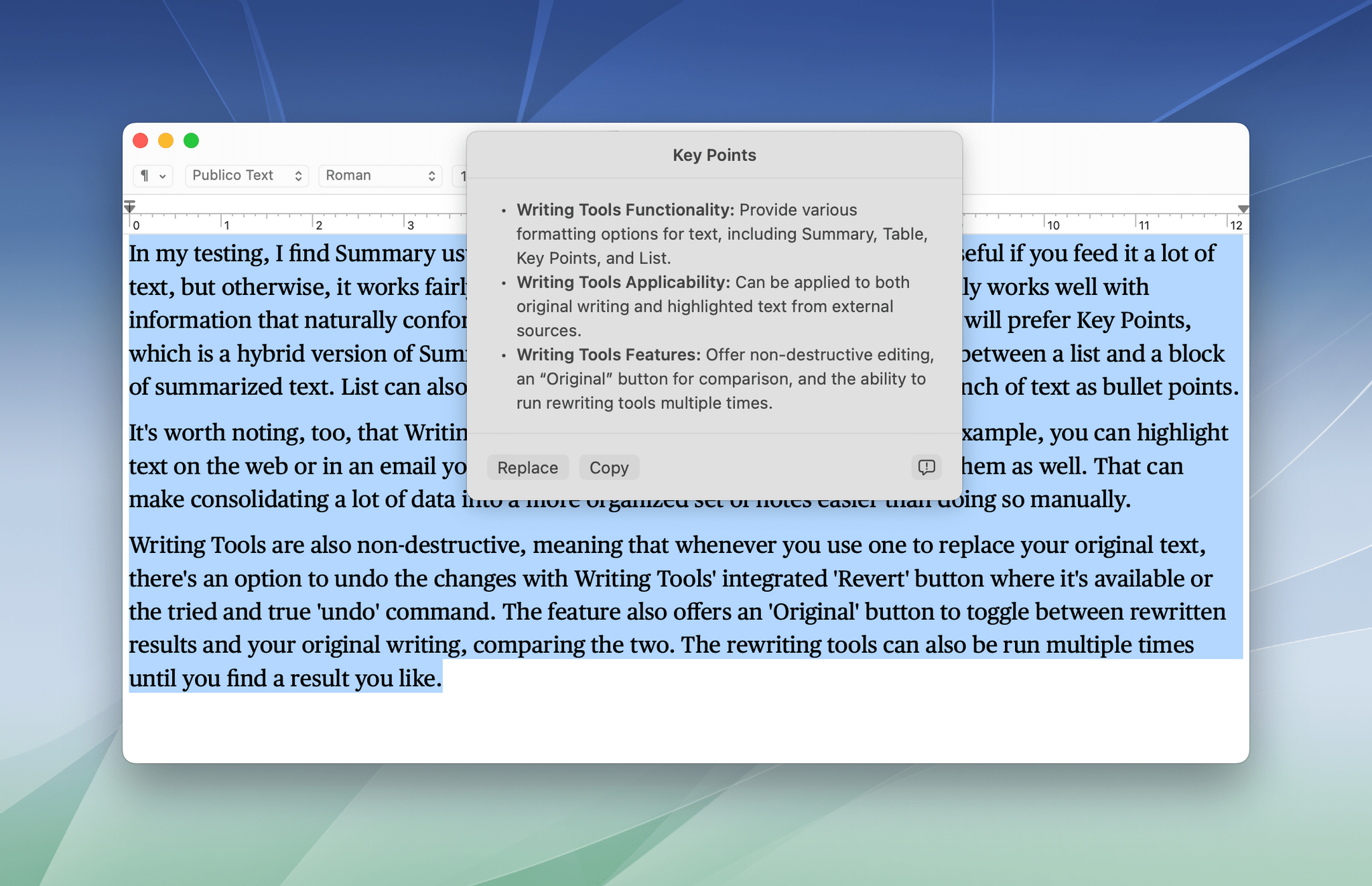

The Writing Tools menu also includes buttons to create multiple forms of lists and summaries:

- Summary

- Key Points

- List

- Table

In my testing, I find Summary usually generates results that are too short to be useful if you feed it a lot of text, but otherwise, it works fairly well. A table is a niche form of summary that only works well with information that naturally conforms to rows and columns. I suspect most people will prefer Key Points, which is a hybrid version of Summary and List that strikes a nice middle ground between a list and a block of summarized text. A list can also be handy when you simply want to format a bunch of text as bullet points.

It’s worth noting, too, that Writing Tools aren’t limited to your own writing. For example, you can highlight text on the web or in an email you receive and apply any of the Writing Tools to it as well. That can make consolidating a lot of data into a more organized set of notes easier than doing so manually.

Writing Tools are also non-destructive, meaning that whenever you use one to replace your original text, there’s an option to undo the changes with Writing Tools’ integrated ‘Revert’ button where it’s available or the tried-and-true ‘undo’ command. The feature offers an ‘Original’ button to toggle between rewritten results and your original writing, comparing the two. The rewriting tools can also be run multiple times until you find a result you like.

As things stand today, Writing Tools are good for proofreading if the app you’re using includes direct Writing Tools integration. The Key Points and List features are also useful for bringing together research materials. The rewriting tools, however, are fine for low-stakes uses like dashing off a quick email message, but they’re too rough for writing a cover letter for a job or an important memo for work. Over time, that may change, but for now, I’d use the rewriting tools cautiously and sparingly.

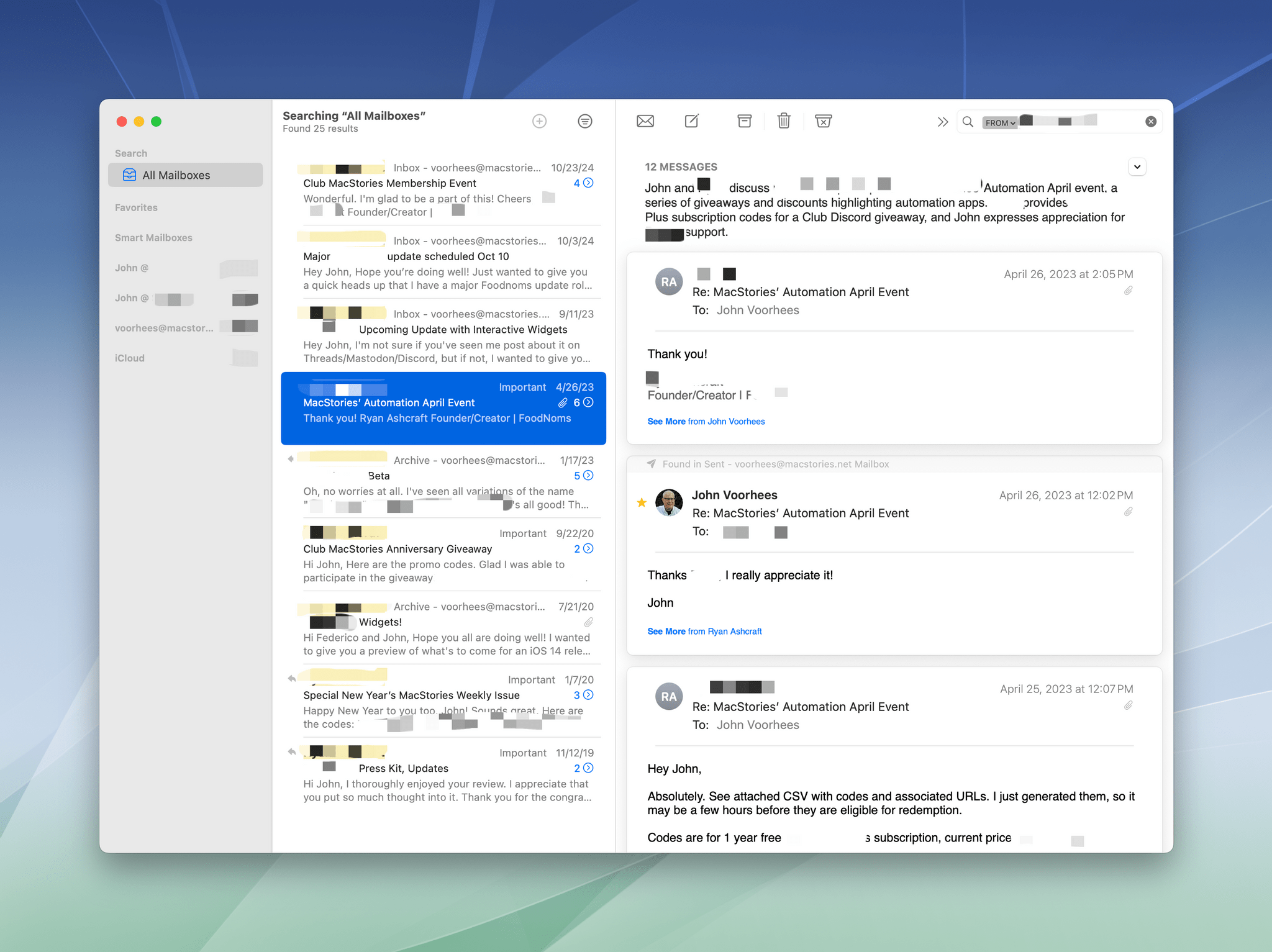

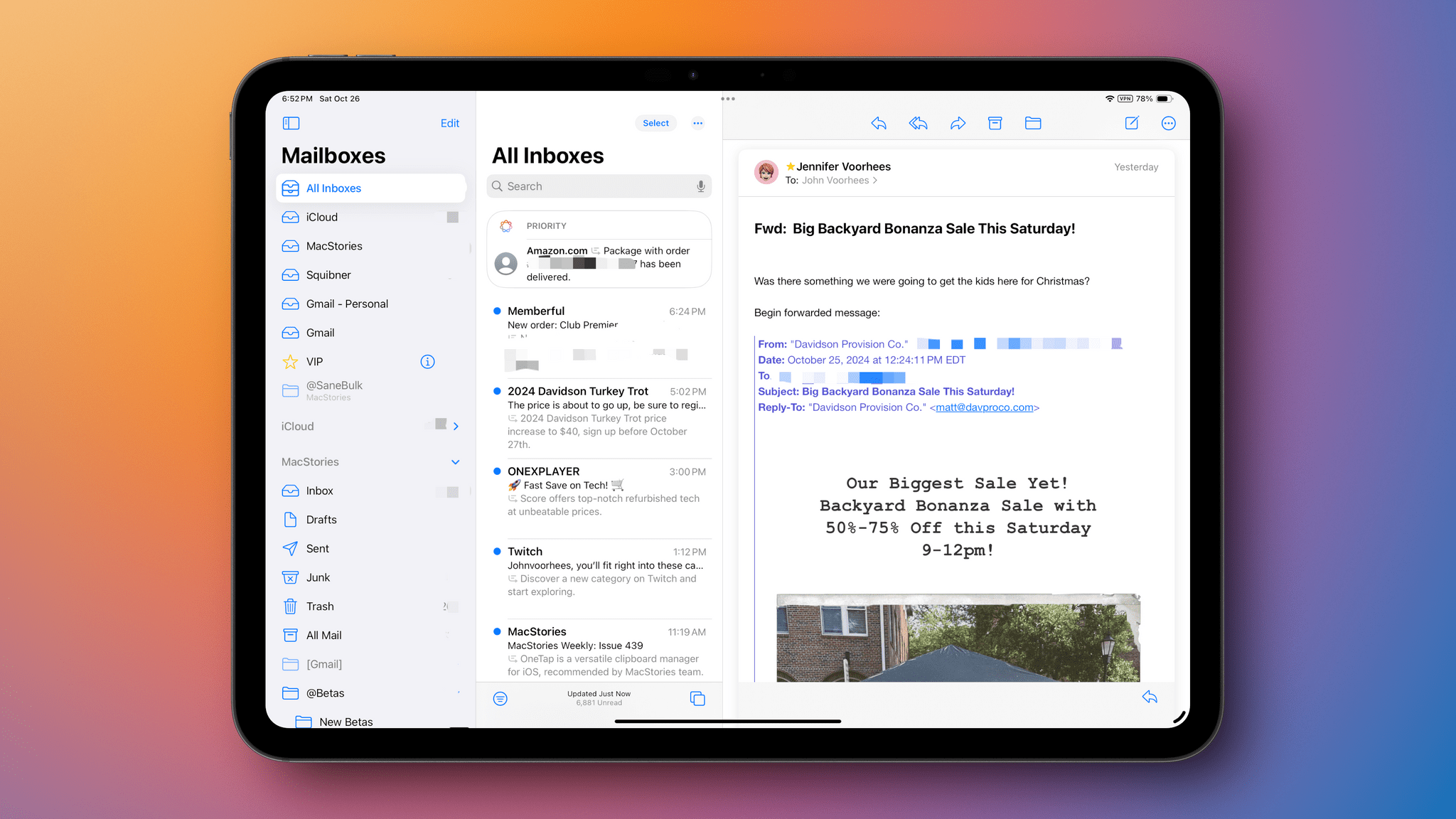

The Priority Inbox is a great concept, but more often than not, mine is full of Amazon delivery notifications and other messages I don’t care about.

Apple Intelligence’s Priority Inbox has made me realize something about my use of email that I never thought about. I believed that I relied on subjects and message previews much more than I actually did. In fact, I focus much more on whether a message is from a person and, if so, who they are when I’m deciding what is important.

The trouble with the Priority Inbox is that it doesn’t play by my personal email rules. It seems to be getting better over time, but for the longest time, not a single message from a person appeared in my Priority Inbox, which is exactly the opposite of what I want. If all the feature did was include messages from people I’ve exchanged messages with before, it would be vastly more useful. Instead, I’ve seen a lot of Amazon delivery notifications and other notification-type messages that just aren’t very important.

More recently, I’ve started to see a few people who I’ve corresponded with before filter into my Priority Inbox, which makes me optimistic that the feature might get to a more useful place eventually. However, it still isn’t consistent enough to rely on.

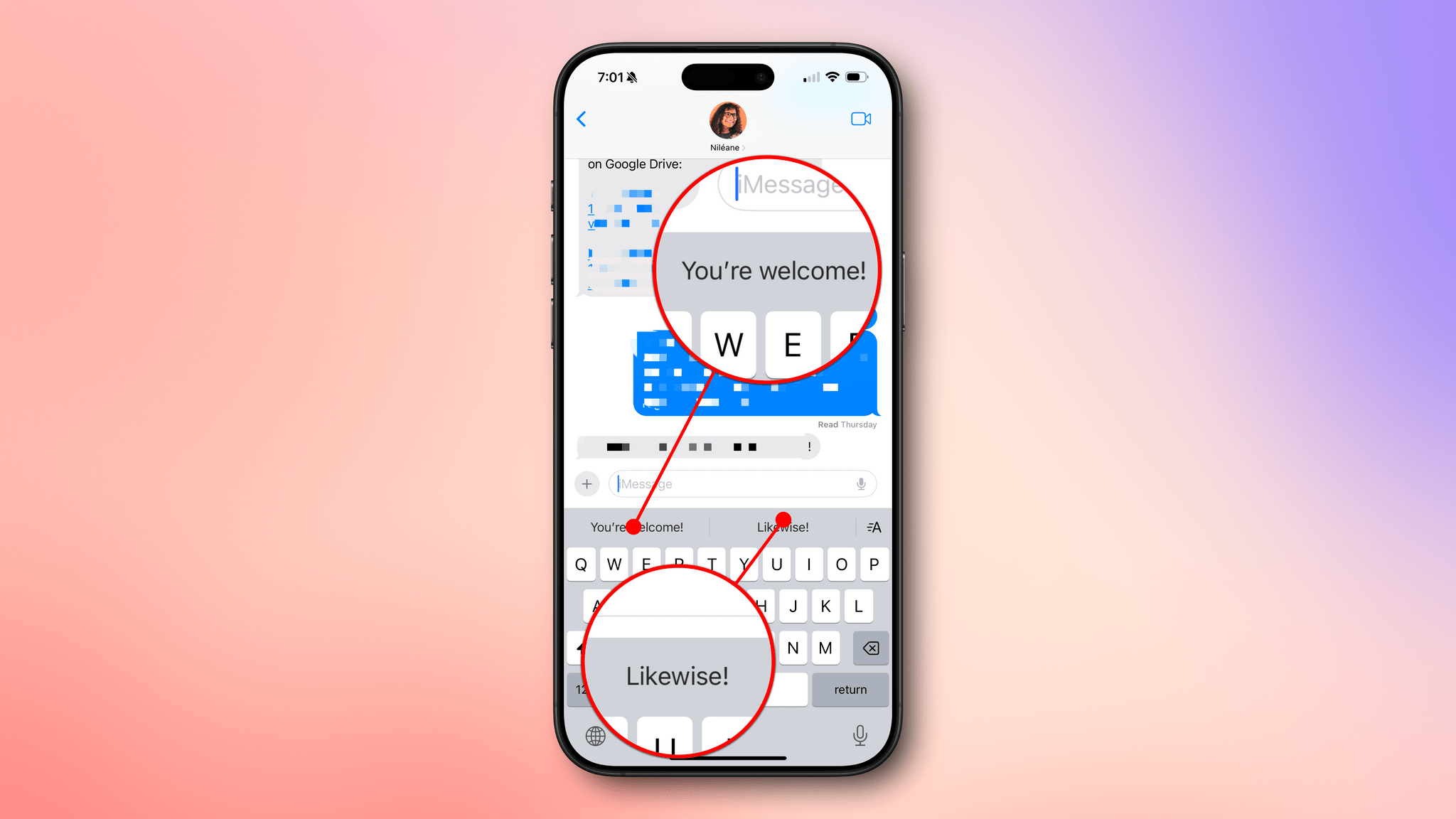

Mail, along with Messages, also offers quick response suggestions. Other email apps have had similar features that rely on templates for a long time, and they can be helpful. However, Apple Intelligence’s suggested responses are rarely what I’d write myself, even if I’m just dashing off a quick, “I’ll check it out,” or, “Thanks,” to let someone know I’ve seen their message and will follow up. So until they get better, I’ll simply ignore suggested responses.

Finally, Mail can also summarize the contents of messages. I’m not a fan of article summaries in Safari, but they’re more useful in Mail. They are often a poor substitute for reading a long email, but they do give you the gist of what the message is about, which may be all you need in many cases.

Better yet, summaries also work with email threads, which is where I’ve found them most useful. Often, I’ll have multiple threads with the same person, and sifting through each to find what I’m looking for isn’t always easy, even using search. However, with summaries, I can quickly understand the topic of a long thread, which is helpful and something I will continue to use.

Summaries

Summaries drain nuance and meaning from text. Yet, summarization can also be a valuable tool if used wisely. Apple Intelligence does okay with summaries, but it could do better.

Notification Summaries

One of my favorite parts of using iOS 18.1 is reading the often hilarious summaries of Discord messages. Most of the messages in the MacStories Discord are short, and they’re often not carefully written. The results have entertained me and everyone on the team as we’ve shared the results with each other.

In other instances, notification summaries are more useful – like here, where I only really care about the most recent status change.

The summaries aren’t all funny, though. Sometimes, they’re just blunt and impersonal. I recall one where I came across as demanding and mean when the message I sent didn’t have that tone at all. Then there was the guy who got a summary of a text message breakup from his girlfriend, which is pretty brutal.

At times, notification summaries are useful, providing a quick way to catch up on key points, even if details are lost. The problem is that they are too often nonsensical or vague. Perhaps that will improve over time.

The contrast with Writing Tools here is interesting. Whereas Writing Tools will go out of their way to add extraneous friendly phrases that sometimes tip into sounding insincere, notification summaries ruthlessly cut all nuance. It makes me wonder if our communications are about to enter a strange era where every message is sent dripping with excess and read with brutalist efficiency.

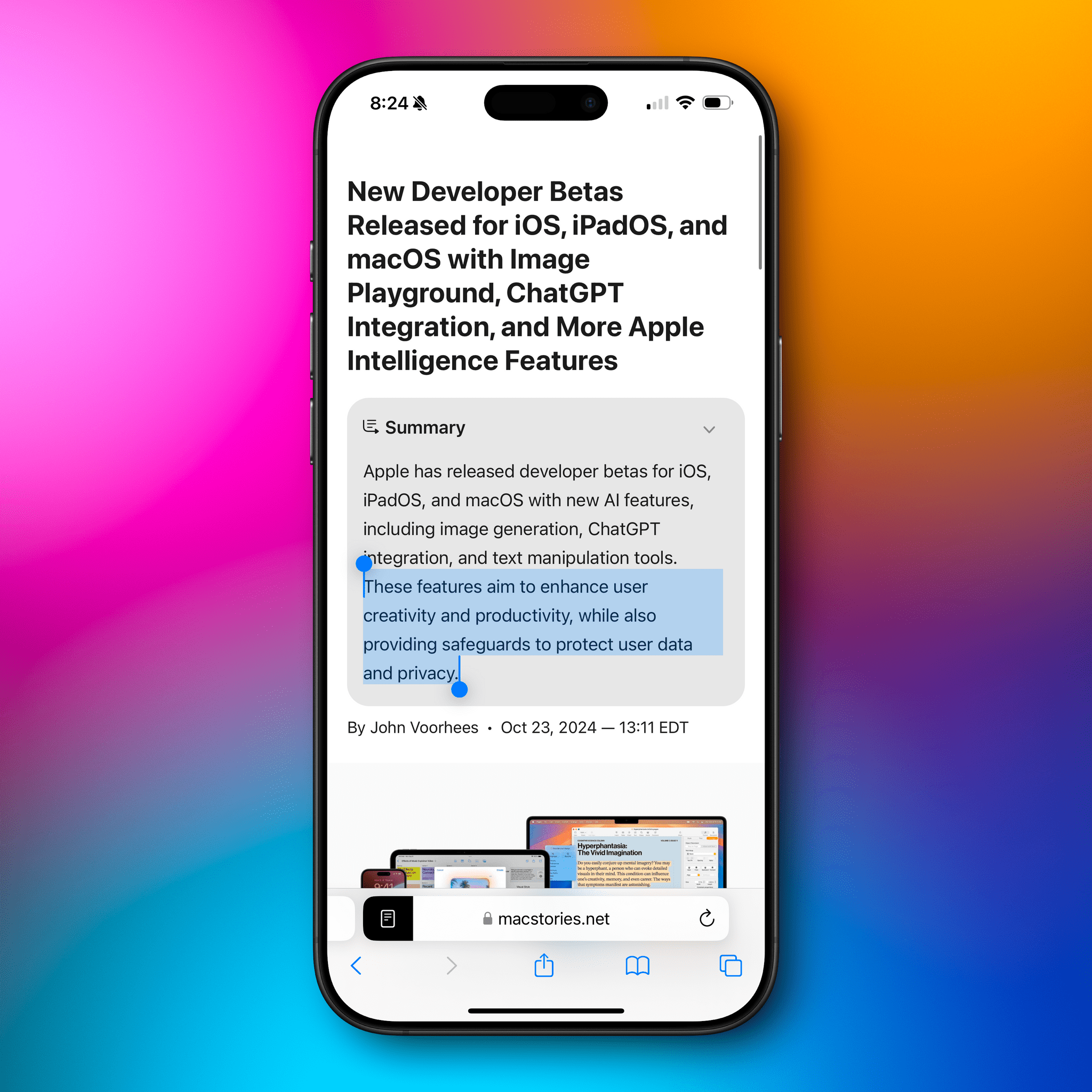

Safari Summaries

Most summaries are too brief to be useful except as a way to get a superficial idea of a story’s topic.

Safari includes a button to summarize articles when you’re in Reader mode. These summaries are so short that their utility is extremely limited. Here’s the summary of Federico’s iPad mini review:

The new iPad mini features the A17 Pro chip, 8GB of RAM, and Wi-Fi 6E, improving performance and Wi-Fi speeds. Despite its unchanged display and lack of Stage Manager support, the iPad mini remains a compelling companion device for media consumption and streaming. The iPad mini’s compact form factor makes it ideal for streaming games and other media-intensive activities.

It’s accurate, but it completely misses the notion of ‘The Third Place,’ which is the most interesting part of the story and what sets the review apart from others.

Summaries aren’t always accurate, either. Take this summary of my story about the iOS 18.2, iPadOS 18.2, and macOS 15.2 betas:

According to the summary, the article includes the notion that, “These features aim to enhance user creativity and productivity, while also providing safeguards to protect user data and privacy.”

Those ideas aren’t anywhere to be found in my story. So while summaries may provide a very high-level gist of articles, I wouldn’t rely on them for any more than that.

Apple Intelligence is still in its infancy. It’s a rougher mix of features than the company usually releases to the public, which I don’t like; but given where the tech industry is with AI, I’m not surprised that Apple didn’t wait to release them in beta form. Today’s features will undoubtedly evolve and improve quickly. Sometimes, that’s just how the tech industry goes.

Limitations aside, I think the largely on-device, privacy-first approach Apple is taking is the right one. I also like the way Apple has deeply integrated Apple Intelligence into its OSes and system apps.

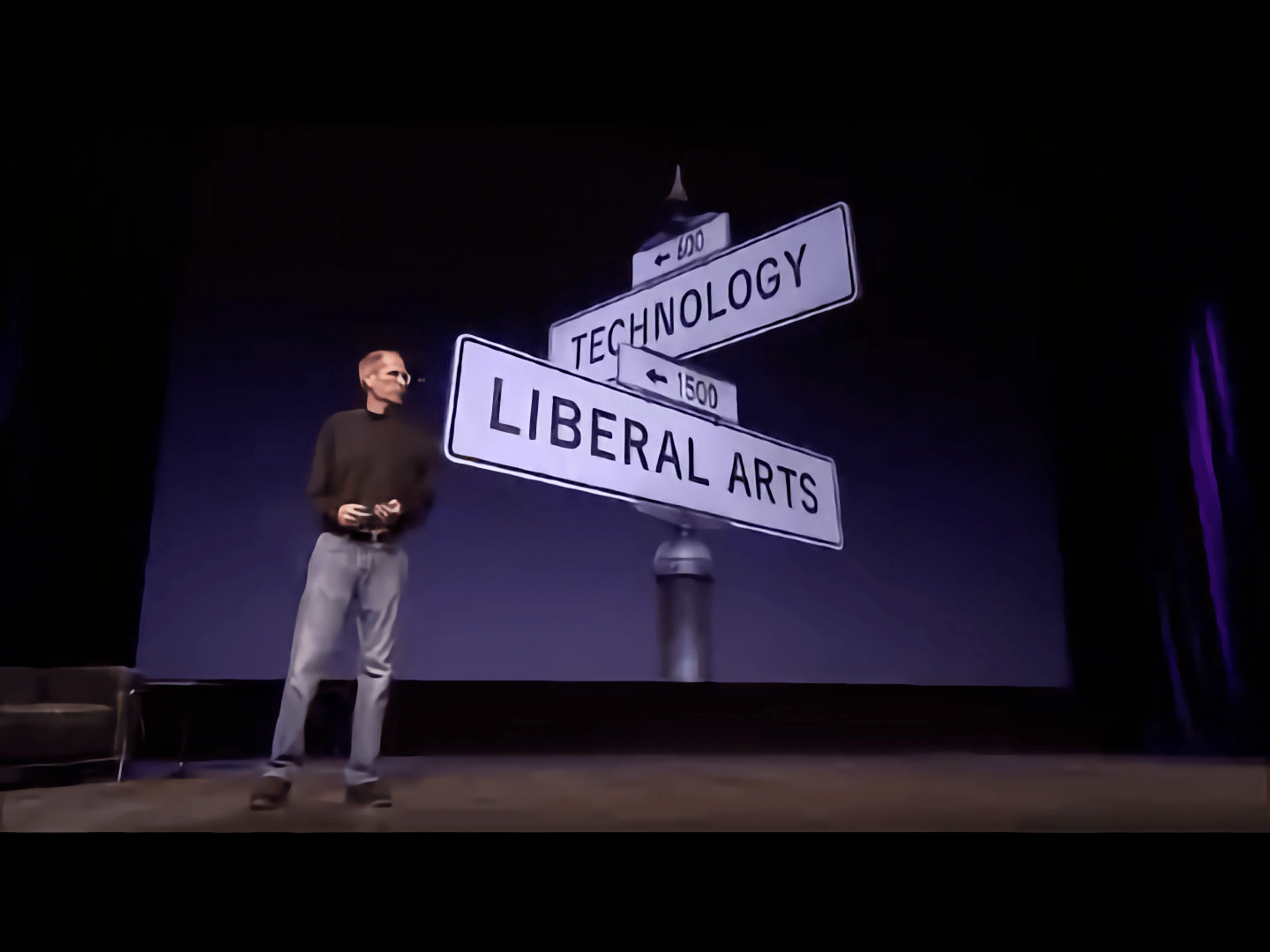

Still, I’ve never been more apprehensive about the company’s direction than I am now. Steve Jobs planted Apple at the intersection of technology and the liberal arts. That was more than just marketing fluff. The company was founded and thrived on the creative tension at that intersection, somehow managing to maintain a balance between the two.

With Apple Intelligence, I fear the scales have tipped too far in favor of technology at the expense of the liberal arts. AI has vast potential to do good in the world, but too often, it’s been used to drain humanity from whatever it touches. That’s antithetical to the values Apple has stood for historically, but even Apple mined the web for content to train its large language models with casual disregard for its creators, which gives me pause.

I want Apple to look at AI differently and challenge AI’s status quo, pushing the industry forward in a way that advances culture instead of undermining it. That’s a tall order, but shouldn’t that be the exact sort of goal that one of the biggest companies in the world with Apple’s heritage aims to achieve?

The launch of Apple Intelligence today will fade into another tick mark on technology’s long timeline. Apple Intelligence’s rough edges will be smoothed out and forgotten, and we’ll all move on to the next big thing. What’s important, though, is that these features are the first steps of one of the world’s biggest companies down a path that has profound consequences beyond the company’s quarterly earnings report. I wish I had a better feeling about and insight into where this is heading, but what I know for certain is that I’m here for it.